Development Tools for Embedded Vision

ENCOMPASSING MOST OF THE STANDARD ARSENAL USED FOR DEVELOPING REAL-TIME EMBEDDED PROCESSOR SYSTEMS

The software tools (compilers, debuggers, operating systems, libraries, etc.) encompass most of the standard arsenal used for developing real-time embedded processor systems, supplemented by specialized vision libraries and, in some cases, vendor-specific development tools. On the hardware side, requirements depend on the application space; the designer may need equipment for monitoring and testing real-time video data. Most of these hardware development tools are already used in other types of video system design.
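To make "testing real-time video data" more concrete, here is a minimal Python sketch of one such software-side check: scanning capture timestamps for late or dropped frames. The function name `check_frame_timing`, the 30 fps default, and the 50% jitter tolerance are illustrative assumptions, not part of any particular vendor tool.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class FrameReport:
    """Summary of frame-timing behavior for a captured stream."""
    frame_count: int
    late_intervals: int    # intervals longer than the nominal period plus tolerance
    dropped_frames: int    # estimated frames missing from the stream
    max_interval_ms: float


def check_frame_timing(timestamps_ms: Sequence[float],
                       fps: float = 30.0,
                       tolerance: float = 0.5) -> FrameReport:
    """Flag late or missing frames from a sequence of capture timestamps.

    timestamps_ms: strictly increasing capture times in milliseconds.
    fps: nominal frame rate of the source (assumed value, e.g. 30 fps).
    tolerance: fraction of one frame period allowed as jitter before an
               interval is counted as late (assumed value).
    """
    period = 1000.0 / fps
    late = 0
    dropped = 0
    max_interval = 0.0
    for prev, curr in zip(timestamps_ms, timestamps_ms[1:]):
        interval = curr - prev
        if interval <= 0:
            raise ValueError("timestamps must be strictly increasing")
        max_interval = max(max_interval, interval)
        if interval > period * (1.0 + tolerance):
            late += 1
            # Estimate how many frame slots were skipped inside this gap.
            dropped += round(interval / period) - 1
    return FrameReport(len(timestamps_ms), late, dropped, max_interval)
```

For example, the timestamps `[0, 33.3, 66.7, 133.3, 166.7]` at 30 fps contain one gap of roughly two frame periods, so the report shows one late interval and one estimated dropped frame.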

Both general-purpose and vendor-specific tools

Many vendors of vision devices use integrated CPUs based on the same instruction set (ARM, x86, etc.), allowing a common set of software development tools. However, even when the base instruction set is the same, each CPU vendor integrates a different set of peripherals with unique software interface requirements. In addition, most vendors accelerate the CPU with specialized computing devices (GPUs, DSPs, FPGAs, etc.). This extended CPU programming model requires customized versions of standard development tools. Most CPU vendors develop their own optimized software toolchain while also working with third-party software tool suppliers to ensure that their CPU components are broadly supported.
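One common way application code copes with a shared instruction set plus vendor-specific accelerators is to program against a common interface and select a backend at run time, keeping a portable CPU path as the reference and fallback. The Python sketch below illustrates that pattern only; `ThresholdBackend`, `CpuBackend`, `HypotheticalAcceleratorBackend`, and `select_backend` are hypothetical names, and the "accelerator" simply reuses the CPU code where a real port would call a vendor SDK.

```python
from abc import ABC, abstractmethod
from typing import List

Image = List[List[int]]  # grayscale image as rows of 8-bit pixel values


class ThresholdBackend(ABC):
    """Common interface that application code is written against."""

    @abstractmethod
    def threshold(self, image: Image, level: int) -> Image:
        ...


class CpuBackend(ThresholdBackend):
    """Portable reference path: runs on any CPU the standard toolchain targets."""

    def threshold(self, image: Image, level: int) -> Image:
        return [[255 if px >= level else 0 for px in row] for row in image]


class HypotheticalAcceleratorBackend(ThresholdBackend):
    """Placeholder for a vendor-accelerated path (GPU, DSP, or FPGA).

    A real implementation would call the vendor's SDK; this sketch only
    checks a flag standing in for device detection.
    """

    def __init__(self, device_present: bool) -> None:
        self.device_present = device_present

    def threshold(self, image: Image, level: int) -> Image:
        if not self.device_present:
            raise RuntimeError("accelerator not available")
        # Stand-in for an offloaded kernel; its output must match the CPU reference.
        return CpuBackend().threshold(image, level)


def select_backend(accelerator_present: bool) -> ThresholdBackend:
    """Prefer the accelerator when present, otherwise fall back to the CPU path."""
    if accelerator_present:
        return HypotheticalAcceleratorBackend(device_present=True)
    return CpuBackend()
```

Keeping the CPU path as the reference makes it straightforward to compare accelerator output against it during testing, which matters when each vendor's offload path is built with a different customized toolchain.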

Heterogeneous software development in an integrated development environment

Since vision applications often require a mix of processing architectures, the development tools become more complicated: they must handle multiple instruction sets and additional system-level debugging challenges. Most vendors provide a suite of tools that integrates these development tasks into a single interface, simplifying software development and testing.
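One of those system-level debugging challenges is seeing where time goes when a frame passes through several processing elements. Integrated tools typically address this by merging activity onto a single timeline. The Python sketch below shows the idea in miniature; `UnifiedTrace` and the element labels such as `"dsp"` are hypothetical, and in this sketch every stage actually runs on the host CPU, with the labels only marking where a real pipeline would execute each stage.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List, Tuple


class UnifiedTrace:
    """Collects stage timings from several processing elements on one timeline."""

    def __init__(self) -> None:
        # Each event is (element, stage, start_seconds, end_seconds).
        self.events: List[Tuple[str, str, float, float]] = []

    @contextmanager
    def span(self, element: str, stage: str) -> Iterator[None]:
        """Time the enclosed block and record it under the given element label."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.events.append((element, stage, start, time.perf_counter()))

    def busy_time_by_element(self) -> Dict[str, float]:
        """Total seconds spent in traced stages, grouped by processing element."""
        totals: Dict[str, float] = {}
        for element, _stage, start, end in self.events:
            totals[element] = totals.get(element, 0.0) + (end - start)
        return totals
```

Usage might look like `with trace.span("cpu", "capture"): ...` followed by `with trace.span("dsp", "filter"): ...`, after which `busy_time_by_element()` shows how the work was split across the labeled elements.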

Avnet to Exhibit at the 2024 Embedded Vision Summit

05/09/2024 – PHOENIX – Avnet’s exhibit plans for the 2024 Embedded Vision Summit include new development kits supporting AI applications. The summit is the premier event for practical, deployable computer vision and edge AI, for product creators who want to bring visual intelligence to products. This year’s Summit will be May 21-23, in Santa Clara, California. This

Beyond Smart: The Rise of Generative AI Smartphones

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. From live translations to personalized content management — the new era of mobile intelligence It’s 2024, and generative artificial intelligence (AI) is finally in people’s hands. Literally. This year’s early slate of flagship smartphone releases is a

Lattice to Showcase Advanced Edge AI Solutions at Embedded Vision Summit 2024

May 08, 2024 04:00 PM Eastern Daylight Time–HILLSBORO, Ore.–(BUSINESS WIRE)–Lattice Semiconductor (NASDAQ: LSCC), the low power programmable leader, today announced that it will showcase its latest FPGA technology at Embedded Vision Summit 2024. The Lattice booth will feature industry-leading low power, small form factor FPGAs and application-specific solutions enabling advanced embedded vision, artificial intelligence, and

Outsight Wins the 2024 Airport Technology Excellence Award

May 8, 2024 – Outsight’s groundbreaking Spatial AI Software Platform, Shift, has received the 2024 Airport Technology Excellence Awards, an event aiming to celebrate the best and greatest achievements in the aviation industry. This award recognises Outsight’s role in pushing the boundaries of Spatial Intelligence and 3D perception technologies on the global stage, with its

Say It Again: ChatRTX Adds New AI Models, Features in Latest Update

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Plus, new AI-powered NVIDIA DLSS 3.5 with Ray Reconstruction enhances ray-traced mods in NVIDIA RTX Remix. Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and

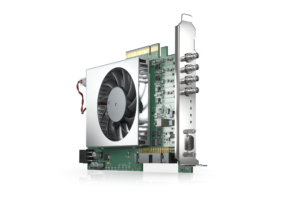

Basler Presents a New, Programmable CXP-12 Frame Grabber

With the imaFlex CXP-12 Quad, Basler AG is expanding its CXP-12 vision portfolio with a powerful, individually programmable frame grabber. Using the graphical FPGA development environment VisualApplets, application-specific image pre-processing and processing for high-end applications can be implemented on the frame grabber. Basler’s boost cameras, trigger boards, and cables combined with the card form a

Why CLIKA’s Auto Lightweight AI Toolkit is the Key to Unlocking Hardware-agnostic AI

This blog post was originally published at CLIKA’s website. It is reprinted here with the permission of CLIKA. Recent advances in artificial intelligence (AI) research have democratized access to models like ChatGPT. While this is good news in that it has urged organizations and companies to start their own AI projects either to improve business

LLMs, MoE and NLP Take Center Stage: Key Insights From Qualcomm’s AI Summit 2023 On the Future of AI

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Experts at Microsoft, Duke and Stanford weigh in on the advancements and challenges of AI Qualcomm’s annual internal artificial intelligence (AI) Summit brought together industry experts and Qualcomm employees from over the world to San Diego in