Processors for Embedded Vision

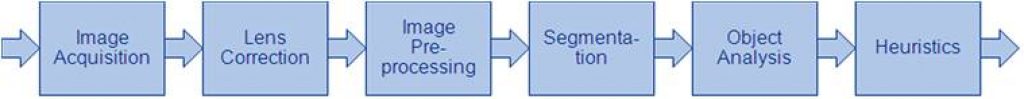

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.

Graphics Processing Units

High-performance GPUs deliver massive amounts of parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, which lets them meet the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
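To make "parallel processing on pixel data" concrete, here is a minimal Python sketch of a per-pixel threshold. It is an illustration only, not GPU code: the function names and the 128 cutoff are assumptions. The thread pool only demonstrates that the work splits into independent pieces; CPython threads are unlikely to speed up this arithmetic. On a GPU, each output pixel would typically be assigned to its own hardware thread.

```python
from concurrent.futures import ThreadPoolExecutor


def threshold_row(row, cutoff=128):
    """Binarize one row; each output pixel depends only on its own input pixel."""
    return [255 if p >= cutoff else 0 for p in row]


def threshold_image(image, workers=4):
    """Threshold every row. Rows share no state, so they can run in any order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order regardless of completion order.
        return list(pool.map(threshold_row, image))


# Tiny hypothetical 2x3 grayscale image (values 0..255).
image = [
    [10, 200, 130],
    [128, 127, 255],
]
binary = threshold_image(image)
print(binary)
```

Because no pixel reads a neighbor's result, the same computation gives identical output whether it runs on one worker or many. That independence is the property that lets this class of workload scale across many parallel execution units.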

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
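The streaming style described above can be sketched in Python as a filter that consumes pixels one at a time and keeps only a small window of recent samples, rather than buffering a whole frame. This is a rough software analogy, not DSP code: `sensor_line` is a hypothetical stand-in for a sensor scan line, and the 3-tap box filter is an arbitrary choice for illustration.

```python
from collections import deque


def stream_box_filter(pixels, taps=3):
    """Yield the integer mean of the last `taps` pixels as each new pixel arrives."""
    window = deque(maxlen=taps)
    total = 0
    for p in pixels:
        if len(window) == taps:
            total -= window[0]  # oldest sample is about to be evicted
        window.append(p)
        total += p
        if len(window) == taps:
            yield total // taps


def sensor_line():
    """Hypothetical stand-in for one scan line arriving from an image sensor."""
    yield from [10, 20, 30, 40, 50]


smoothed = list(stream_box_filter(sensor_line()))
print(smoothed)
```

Memory use is fixed by the window size, not the image size, and each sample is touched once as it passes through. A memory architecture built for streaming data favors this access pattern.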

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can implement an FPGA to offer a reprogrammable solution for hardware acceleration. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates/sec (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA has the advantage of being able to simultaneously accelerate multiple portions of a computer vision pipeline. Since the parallel nature of FPGAs offers so much advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
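To put the tera-MAC figure in perspective, the back-of-the-envelope calculation below estimates the multiply-accumulate rate of a single convolution filter. The 1920x1080 frame size, 60 frames per second, and 3x3 kernel are assumed example values, not figures from any vendor. The estimate ignores memory bandwidth, border handling, and real-world utilization.

```python
def conv_macs_per_second(width, height, kernel_size, fps, channels=1):
    """MAC/s for a square kernel applied at every pixel of every frame."""
    macs_per_pixel = kernel_size * kernel_size * channels
    return width * height * macs_per_pixel * fps


# Assumed example workload: one 3x3 filter on single-channel 1080p video at 60 fps.
rate = conv_macs_per_second(1920, 1080, kernel_size=3, fps=60)
print(f"{rate / 1e9:.2f} GMAC/s")  # 1.12 GMAC/s

# Idealized count of such filters that would fit in a 1 tera-MAC/s budget.
filters_per_tera_mac = 1e12 / rate
print(f"{filters_per_tera_mac:.0f} filters")
```

Under these assumptions, one filter needs roughly 1.1 billion MACs per second. A device in the tera-MAC range therefore has headroom for several hundred such filters at once, which is why several pipeline stages can be accelerated at the same time.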

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. Because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make them more difficult to program than other kinds of processors; some ASSPs are not user-programmable.

More than 500 AI Models Run Optimized on Intel Core Ultra Processors

Intel builds the PC industry’s most robust AI PC toolchain and presents an AI software foundation that developers can trust. What’s New: Today, Intel announced it surpassed 500 AI models running optimized on new Intel® Core™ Ultra processors – the industry’s premier AI PC processor available in the market today, featuring new AI experiences, immersive graphics

S32N Family of Scalable Processors Super-integrates Vehicle Functions for Tomorrow’s SDVs

This blog post was originally published at NXP Semiconductors’ website. It is reprinted here with the permission of NXP Semiconductors. To support the transition to future vehicles where capabilities and features are defined with software code, rather than with hardware boxes, a new approach to design them is required. NXP has announced the new S32N

Mixed Messages on MaaS Market Readiness: An Analysis of New Driverless Vehicle Testing Data From the California DMV

Over the last three to four years, the driverless robotaxi industry has begun to flourish. Driverless services are coming online in multiple cities across the US and China. IDTechEx’s recent report, “Future Automotive Technologies 2024-2034: Applications, Megatrends, Forecasts”, predicts that the driverless robotaxi industry will be generating over US$470 billion annually through services in 2034.

Moving Pictures: Transform Images Into 3D Scenes With NVIDIA Instant NeRF

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Learn how the AI research project helps artists and others create 3D experiences from 2D images in seconds. Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more

2024 Embedded Vision Summit Showcase: Keynote Presentation

Check out the keynote presentation “Learning to Understand Our Multimodal World with Minimal Supervision” at the upcoming 2024 Embedded Vision Summit, taking place May 21-23 in Santa Clara, California! The field of computer vision is undergoing another profound change. Recently, “generalist” models have emerged that can solve a variety of visual perception tasks. Also known

2024 Embedded Vision Summit Showcase: Expert Panel Discussion

Check out the expert panel discussion “Multimodal LLMs at the Edge: Are We There Yet?” at the upcoming 2024 Embedded Vision Summit, taking place May 21-23 in Santa Clara, California! The Summit is the premier conference for innovators incorporating computer vision and edge AI in products. It attracts a global audience of technology professionals from

2024 Embedded Vision Summit Showcase: Qualcomm General Session Presentation

Check out the general session presentation “What’s Next in On-Device Generative AI” at the upcoming 2024 Embedded Vision Summit, taking place May 21-23 in Santa Clara, California! The generative AI era has begun! Large multimodal models are bringing the power of language understanding to machine perception, and transformer models are expanding to allow machines to

2024 Embedded Vision Summit Showcase: Network Optix General Session Presentation

Check out the general session presentation “Scaling Vision-Based Edge AI Solutions: From Prototype to Global Deployment” at the upcoming 2024 Embedded Vision Summit, taking place May 21-23 in Santa Clara, California! The Embedded Vision Summit brings together innovators in silicon, devices, software and applications and empowers them to bring computer vision and perceptual AI into

Ambarella’s Latest 5nm AI SoC Family Runs Vision-language Models and AI-based Image Processing With Industry’s Lowest Power Consumption

CV75S SoCs Add CVflow® 3.0 AI Engine, USB 3.2 Connectivity and Dual Arm® A76 CPUs for Significantly Higher Performance in Security Cameras, Video Conferencing and Robotics SANTA CLARA, Calif., April 8, 2024 — Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, today announced during the ISC West security expo, the continued expansion of its

Neusoft Reach and Ambarella Forge Strategic Partnership to Drive Advancements in Autonomous Driving and Intelligent Automotive Technology

SHANGHAI and SANTA CLARA, Calif., April 30, 2024 — Neusoft Reach Automotive Technology (Shanghai) Co., Ltd., a subsidiary of Neusoft specializing in intelligent vehicle technology, and Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, announced the establishment of a strategic partnership at the Beijing Auto Show. This cooperation is based on the strong resources

AI Chip Market to Grow 10x in the Next Ten Years and Become a $300 Billion Industry

The AI chip market has experienced impressive growth in recent years, driven by the surging demand for AI-powered technologies across industries. The global AI renaissance, which started last year, only fuelled the market growth, helping it to reach a staggering value in the following years. According to data presented by AltIndex.com, the global AI chip

Navigating the Future: How Avnet is Addressing Challenges in AMR Design

This blog post was originally published at Avnet’s website. It is reprinted here with the permission of Avnet. Autonomous mobile robots (AMRs) are revolutionizing industries such as manufacturing, logistics, agriculture, and healthcare by performing tasks that are too dangerous, tedious, or costly for humans. AMRs can navigate complex and dynamic environments, communicate with other devices

Embedded Vision Summit® Announces Full Conference Program for Edge AI and Computer Vision Innovators, May 21-23 in Santa Clara, California

The premier event for product creators incorporating computer vision and edge AI in products and applications SANTA CLARA, Calif., April 29, 2024 /PR Newswire/ — The Edge AI and Vision Alliance, a worldwide industry partnership, today announced the full program for the 2024 Embedded Vision Summit, taking place May 21-23 at the Santa Clara Convention

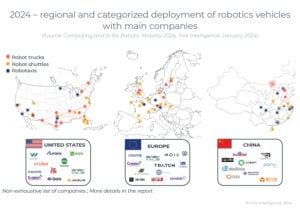

Decoding the Dynamics of Processors in Robotaxis, Robot Shuttles and Robot Trucks from $14M in 2023 to $1B in 2034: Which Strategy Will Baidu-Apollo, Cruise, Waymo, and AutoX Adopt?

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. $1B market is expected for robotic vehicle processors in 2034 The global market for robotic vehicles is led by the United States and China, with Europe following closely. Robotaxi services are thriving

Tesla’s Robotaxi Surprise: What You Need to Know

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Level 4 autonomous driving would be difficult to implement with the EV manufacturer’s current camera-only strategy. Elon Musk’s announcement that Tesla would hold a robotaxi unveiling on 8/8 was surprising, as the company

Unleashing the Potential for Assisted and Automated Driving Experiences Through Scalability

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Working within an ecosystem of innovators and suppliers is paramount to addressing the challenge of building a scalable ADAS solution While the recent sentiment around fully autonomous vehicles is not overly positive, more and more vehicles on

The Building Blocks of AI: Decoding the Role and Significance of Foundation Models

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. These neural networks, trained on large volumes of data, power the applications driving the generative AI revolution. Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible,

NIST Advanced Packaging Summit

Semiconductor packaging can be incredibly boring. That said, advanced packaging is a key to every high-complexity semiconductor – both on and off the planet. Nvidia, AMD, and Intel have all utilized chiplets and advanced packages for their latest AI/ML accelerators and processors. Without 2.5D and 3D design and assembly techniques, these and many other essential

Turbocharging AI During CES

This blog post was originally published at Ambarella’s website. It is reprinted here with the permission of Ambarella. Innovation was the focus of our exhibition during this year’s CES, with the theme of Turbocharged AI. Over the course of four days, we held more than 300 customer meetings that showcased nearly 40 demonstrations and customer

DEEPX Expands First-generation AI Chips into Intelligent Security and Video Analytics Markets

Award-winning on-device AI chipmaker will pursue key industry partnerships to bolster this expansion; following a strong showing at ISC West, it will continue this momentum at Secutech Taipei, Embedded Vision Summit, and COMPUTEX 2024. TAIPEI and SEOUL, South Korea, April 23, 2024 /PRNewswire/ — DEEPX, an on-device AI semiconductor company, is announcing plans to expand