Vision Algorithms for Embedded Vision

Most computer vision algorithms were developed on general-purpose computer systems, with software written in a high-level language. Some pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. With today's broader range of embedded vision implementations, existing high-level algorithms may not fit within the system's constraints (for example, on processing power, memory, or energy consumption), so new innovation is required to achieve the desired results.
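As an illustration of the kind of pixel-level operation described here, the following C sketch applies a 3x3 spatial filter to an 8-bit grayscale image. The function name `filter3x3`, the row-major image layout, and the choice to copy border pixels unchanged are assumptions made for brevity; real libraries typically offer several border-handling modes.

```c
#include <assert.h>
#include <stddef.h>

/* Apply a 3x3 kernel to an 8-bit grayscale image stored row-major.
   This is the correlation form (no kernel flip), which is identical to
   convolution for symmetric kernels such as blurs.
   Assumption: the outermost rows and columns are copied unchanged. */
void filter3x3(const unsigned char *src, unsigned char *dst,
               int width, int height, const float kernel[3][3])
{
    assert(src != NULL && dst != NULL);
    assert(width >= 3 && height >= 3);

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (y == 0 || x == 0 || y == height - 1 || x == width - 1) {
                dst[y * width + x] = src[y * width + x];
                continue;
            }
            /* Weighted sum of the 3x3 neighbourhood around (x, y). */
            float acc = 0.0f;
            for (int ky = -1; ky <= 1; ++ky)
                for (int kx = -1; kx <= 1; ++kx)
                    acc += kernel[ky + 1][kx + 1] *
                           src[(y + ky) * width + (x + kx)];
            /* Clamp to the 0..255 range of an 8-bit pixel, then round. */
            if (acc < 0.0f) acc = 0.0f;
            if (acc > 255.0f) acc = 255.0f;
            dst[y * width + x] = (unsigned char)(acc + 0.5f);
        }
    }
}
```

For example, a kernel whose nine coefficients are all 1/9 produces a simple box blur, averaging each interior pixel with its eight neighbours.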

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. With such a broad range of processors for embedded vision, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
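One common way to make such an algorithm hardware-friendly is to replace floating-point arithmetic with integer operations. The sketch below, a hypothetical `gauss3x3_fixed` function using a row-major layout and copying border pixels unchanged (both assumptions), computes a 3x3 Gaussian blur with the kernel [1 2 1; 2 4 2; 1 2 1] / 16 using only additions and shifts. This suits processors or FPGA fabric without a floating-point unit. It illustrates one general technique and does not describe any particular vendor's implementation.

```c
#include <assert.h>
#include <stdint.h>

/* Integer-only 3x3 Gaussian blur, kernel [1 2 1; 2 4 2; 1 2 1] / 16.
   The kernel is separable, so it is applied as a horizontal 1-2-1 pass
   followed by a vertical 1-2-1 pass. Multiplying by 2 becomes a left shift
   and dividing by 16 becomes a right shift (with rounding).
   Assumption: border pixels are copied unchanged. */
void gauss3x3_fixed(const uint8_t *src, uint8_t *dst, int width, int height)
{
    assert(width >= 3 && height >= 3);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const int i = y * width + x;
            if (y == 0 || x == 0 || y == height - 1 || x == width - 1) {
                dst[i] = src[i];
                continue;
            }
            const uint8_t *up = src + i - width;
            const uint8_t *mid = src + i;
            const uint8_t *dn = src + i + width;
            /* Horizontal 1-2-1 pass on each of the three rows. */
            unsigned r0 = up[-1] + (up[0] << 1) + up[1];
            unsigned r1 = mid[-1] + (mid[0] << 1) + mid[1];
            unsigned r2 = dn[-1] + (dn[0] << 1) + dn[1];
            /* Vertical 1-2-1 pass; the maximum is 255 * 16 = 4080. */
            unsigned sum = r0 + (r1 << 1) + r2;
            /* Divide by 16, rounding half up. */
            dst[i] = (uint8_t)((sum + 8) >> 4);
        }
    }
}
```

Because the kernel weights sum to a power of two, the normalisation is a single shift. This is why such kernels are popular in fixed-point and FPGA designs.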

This section refers to both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, since Alliance Members share this information directly with the vision community.
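For the general-purpose edge-detection case, a minimal sketch of the Sobel operator follows. The function name `sobel_magnitude` is hypothetical, and border pixels are set to zero by assumption. The gradient magnitude is approximated as |Gx| + |Gy| rather than the square root of Gx² + Gy², an approximation often chosen when square roots are expensive on the target hardware.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Sobel edge magnitude for an 8-bit grayscale image stored row-major.
   Uses |Gx| + |Gy| instead of sqrt(Gx^2 + Gy^2) and clamps to 255.
   Assumption: border pixels are set to 0. */
void sobel_magnitude(const uint8_t *src, uint8_t *dst, int width, int height)
{
    assert(width >= 3 && height >= 3);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const int i = y * width + x;
            if (y == 0 || x == 0 || y == height - 1 || x == width - 1) {
                dst[i] = 0;
                continue;
            }
            const uint8_t *up = src + i - width;
            const uint8_t *mid = src + i;
            const uint8_t *dn = src + i + width;
            /* Gx kernel: [-1 0 1; -2 0 2; -1 0 1] (horizontal gradient). */
            int gx = (up[1] + 2 * mid[1] + dn[1])
                   - (up[-1] + 2 * mid[-1] + dn[-1]);
            /* Gy kernel: [-1 -2 -1; 0 0 0; 1 2 1] (vertical gradient). */
            int gy = (dn[-1] + 2 * dn[0] + dn[1])
                   - (up[-1] + 2 * up[0] + up[1]);
            int mag = abs(gx) + abs(gy);
            dst[i] = (uint8_t)(mag > 255 ? 255 : mag);
        }
    }
}
```

A vertical step edge produces a strong Gx response and no Gy response, while a flat region produces zero in both.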

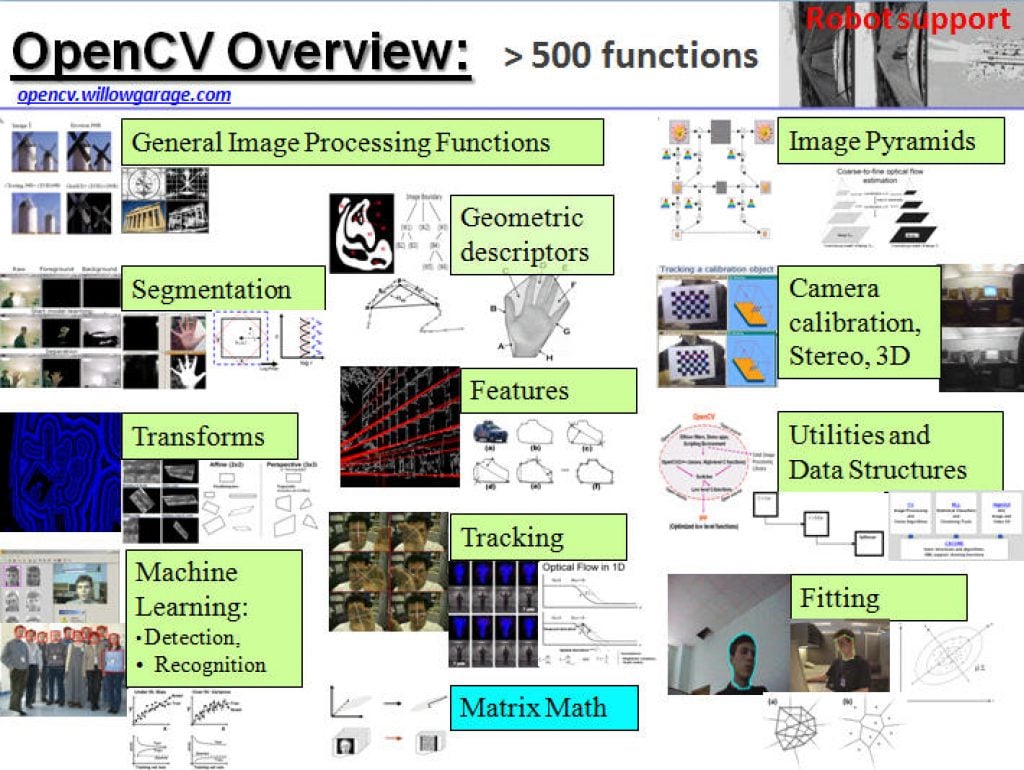

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV Library. OpenCV is open source and written primarily in C++ (its original C interface has since been deprecated), with bindings for Python, Java, and other languages. For more information, see the Alliance's interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created algorithms that are accelerated on its GPUs through general-purpose GPU (GPGPU) computing. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms within its Computer Vision System Toolbox, while also allowing vendors to create their own libraries of functions that are optimized for a specific programmable architecture. National Instruments offers its LabVIEW Vision module library. Xilinx, similarly, provides customers with an optimized computer vision library in the form of Plug and Play IP cores for creating hardware-accelerated vision algorithms in an FPGA.
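Hardware-accelerated vision IP for FPGAs typically processes pixels as a raster-order stream rather than as a stored frame. Only a few previous image lines are kept in on-chip line buffers. The C sketch below models that general line-buffer technique in software for a 3x3 window. It is not Xilinx's or any other vendor's implementation. `Stream3x3`, `stream_init`, `stream_push`, and the `MAX_WIDTH` limit are hypothetical names chosen for illustration, and the window simply sums its nine pixels as a stand-in for a real filter.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MAX_WIDTH 1920 /* hypothetical maximum line length */

/* Software model of a streaming 3x3 window: pixels arrive one at a time in
   raster order, and only two previous lines are buffered, never the frame. */
typedef struct {
    int width;
    int x, y;                 /* raster position of the incoming pixel */
    uint8_t line1[MAX_WIDTH]; /* row y-1 */
    uint8_t line2[MAX_WIDTH]; /* row y-2 */
    uint8_t win[3][3];        /* window; row 0 is the oldest line */
} Stream3x3;

void stream_init(Stream3x3 *s, int width)
{
    assert(width >= 3 && width <= MAX_WIDTH);
    memset(s, 0, sizeof *s);
    s->width = width;
}

/* Push one pixel. Once a full window exists, writes the sum of the 3x3
   neighbourhood centred on (x-1, y-1) to *sum_out and returns 1; else 0. */
int stream_push(Stream3x3 *s, uint8_t px, unsigned *sum_out)
{
    const int x = s->x;
    /* Shift the window left by one column. */
    for (int r = 0; r < 3; ++r) {
        s->win[r][0] = s->win[r][1];
        s->win[r][1] = s->win[r][2];
    }
    /* New right column: the two buffered rows plus the incoming pixel. */
    s->win[0][2] = s->line2[x];
    s->win[1][2] = s->line1[x];
    s->win[2][2] = px;
    /* Update the line buffers: row y-1 becomes row y-2 for this column. */
    s->line2[x] = s->line1[x];
    s->line1[x] = px;

    int ready = (x >= 2 && s->y >= 2);
    if (ready) {
        unsigned sum = 0;
        for (int r = 0; r < 3; ++r)
            for (int c = 0; c < 3; ++c)
                sum += s->win[r][c];
        *sum_out = sum;
    }
    /* Advance to the next raster position. */
    if (++s->x == s->width) {
        s->x = 0;
        ++s->y;
    }
    return ready;
}
```

In hardware, the nine-element sum would typically be unrolled into parallel adders. Once the pipeline has filled, this allows one output per incoming pixel while storing only two lines.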

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters
