Home

The premier conference for innovators incorporating computer vision and edge AI in products.

The premier conference for innovators incorporating computer vision and edge AI in products.

The Summit attracts a global audience of technology professionals from companies developing computer vision and edge AI-enabled products including embedded systems, cloud solutions and mobile applications.

Why Attend? It's a First-Rate Program with Powerful Insights into Practical Perceptual AI.

Join us for three days of learning—from tutorials to Deep-Dive Day, covering the latest technical insights, business trends and vision technologies—all with a focus on practical, deployable computer vision and visual/perceptual AI. The Summit connects the theories from great academic conferences, like CVPR, to the concrete needs of innovators building real-world products.

Four Reasons the Embedded Vision Summit is Different from Other Conferences

Most conferences are run by media or marketing companies whose specialty is, well, running as many conferences as they can—usually without any particular expertise in the subject matter.

Not us.

The Embedded Vision Summit is run by BDTI and the Edge AI and Vision Alliance. It’s the only conference we run. Our whole focus is the technology and business of edge AI and vision—it’s what we do, every day. For 30 years, BDTI’s engineers have designed, implemented and optimized algorithms on embedded systems, and for the last decade, our primary focus has been AI and computer vision at the edge.

We bring that expertise to the Summit each year. When we put together the Summit, we sweat every detail, asking ourselves, “If I were an attendee, what would I need to know? What critical questions do I need answered?” And we set about recruiting the best speakers with the most relevant expertise to answer those questions.

In essence: We build the conference that we’d like to attend, as technology and business innovators in this field.

Our target audience is people incorporating computer vision, perception and edge AI into products. They’re a practical bunch; they want to know what they can do today to build those products, and what’s coming tomorrow for next year’s products. We’re right there with them, and that’s the conference we’ve created.

This year will mark the 13th year of the Embedded Vision Summit. We’ve come a long way from our first event with a handful of talks and some demos in a hotel lobby in 2012. Over the years, we’ve learned a thing or two about delivering a high-quality conference for our attendees.

Every year we survey our attendees and 99% of last year’s attendees say they’d recommend this event to a colleague. <blush>

Learn

In order to deliver real-world insights and know-how into the most current topics in the industry to our attendees, we have packed the program with learning opportunities, including:

Speakers and Sessions: Learn from the best and listen to over 100 expert speakers who actively work in the industry present on the latest applications, techniques, technologies and opportunities in computer vision and visual AI.

See

100+ Exhibitors and Hundreds of Demos: Visit the exhibitors on the exhibit floor and see their latest technologies that enable perception—processors, algorithms, software, sensors, developer tools, services and more! You’ll get to see technology in action by watching cool demos from building-block component suppliers to learn about new and existing solutions to your business and engineering challenges.

Enabling Technologies Track: If you’re a product developer, engineer or business leader looking for real-world, commercial technologies that will help you add visual intelligence and AI to your new products, you’ll want to attend this track’s sessions. You can see things, like:

- Processors and tools for deep-learning-based object recognition.

- Optimized software components for low-cost, energy-efficient, real-time vision.

- Frameworks and services for faster product development.

2024 Keynote

The field of computer vision is witnessing a transformative shift with the advent of versatile “generalist” models trained on vast, unlabeled datasets, displaying adaptability to new tasks with minimal supervision. In the 2024 keynote, Professor Lee will present groundbreaking research on creating intelligent systems that comprehend our multimodal world with minimal human supervision, using images and text—and other modalities like video, audio and LiDAR. Attendees will gain insights into efficiently repurposing existing foundation models for specialized tasks, addressing training cost challenges and unlocking the potential of multimodal machine perception in diverse applications.

Meet the Newest Speakers Added to the Program!

Learn more about our top-rated speakers and see what new insights in computer vision and visual AI they’re bringing to the Summit by clicking on their photo.

Connect

Building valuable business connections is crucial. At the Summit you can connect with engineers, marketers, executives—they’re all here! Here’s how:

- Q&A Sessions: Join in on post-talk Q&A sessions and have your important questions answered directly by the expert speakers.

- Meet the Exhibitors and Sponsors: Connect with key engineers and technologists from top computer vision building-block suppliers to learn about their latest processors, software, development tools and more.

- Mingle at the Technology Exhibits Reception. The evening of Wednesday, May 22, enjoy great food and refreshing beverages while meeting new folks and seeing awesome tech demos at our Exhibits Reception!

Who Attends the Embedded Vision Summit?

More than 1,500 decision-makers, including engineers, engineering managers and executives from companies like these!

Hear What They Had to Say ...

It was awesome.

Senior Researcher

-General Motors

It’s the one conference I attend every year.

R&D Engineering Manager

-Kroger

The three important events for us are Mobile World Congress, CES, and the Embedded Vision Summit.

Director of Marketing

-Cadence

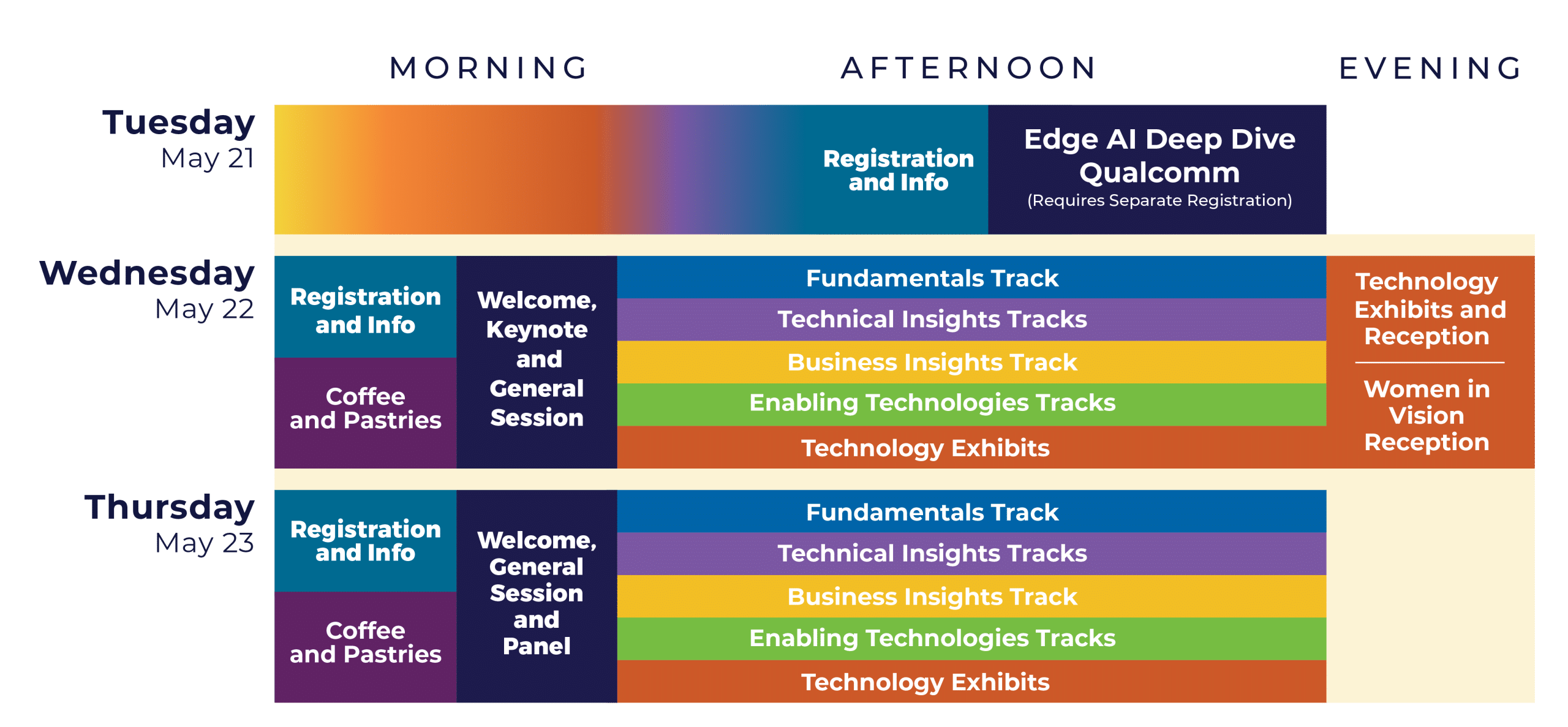

Summit 2024 Schedule at a Glance

Becoming a Sponsor or Exhibitor

Meet your newest, best leads.

Sponsoring or exhibiting is an amazing opportunity to engage with a uniquely qualified audience. Your company can be an integral part of the only global event dedicated to enabling product creators to harness computer vision and visual AI for practical applications.

The Summit brings together the most influential members of the global computer vision industry, including 1,000+ product and application developers, engineers, business leaders, top-level executives, industry visionaries, start-up innovators and more.

In 2023, 92% of attendees said they connected with potential suppliers or customers at the Summit!

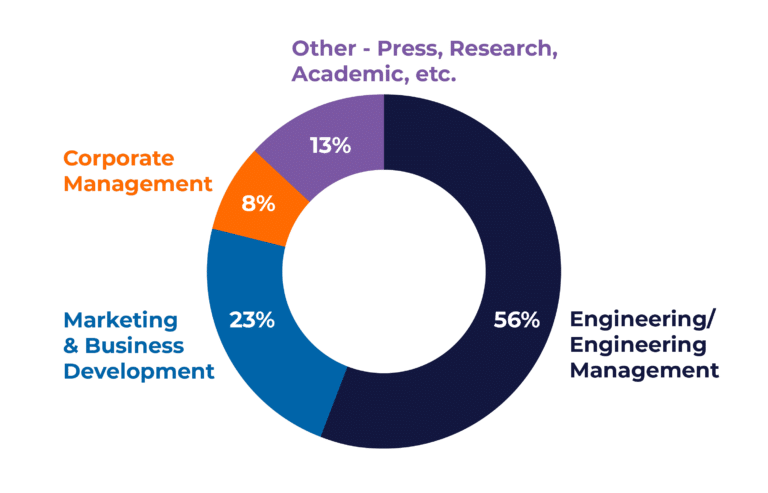

Summit Attendees by Role

Sign Up to Get the Latest Updates on the Embedded Vision Summit Program

Learn about the newest speakers, sessions and other noteworthy details about the Summit Program by leaving us a few details.

See you May 21-23, 2024, at the Santa Clara Convention Center!

-

-

Sponsors & Exhibitors

Interested in sponsoring or exhibiting?

The Embedded Vision Summit gives you unique access to the best qualified technology buyers you’ll ever meet. -

Get in Touch

Want to contact us?

Use the small blue chat widget in the lower right-hand corner of your screen, or the form linked below.STAY CONNECTED

Follow us on Twitter and LinkedIn.