This article was originally published at Texas Instruments' website. It is reprinted here with the permission of Texas Instruments.

Vehicles capable of autonomous operation are in the early stages of development today for use on the roads in the near future. To move self-driving cars from vision to reality, auto manufacturers depend on enabling electronic technologies for sensing, sensor fusion, communications, high-performance processing and other functions. Autonomous vehicle control systems will have to be scalable to accommodate a multi-year evolution as car models change and data loads increase with additional features and improved sensors. Innovations that can result in scalability include the use of distributed processing and localized sensor fusion.

Texas Instruments understands these requirements, based on its automotive expertise and innovative solutions that address the full range of automotive systems needs. TI also conducts its own autonomous vehicle research to help car makers find scalable solutions for systems that will perform well today and in the future.

One of the most exciting technology advances today is development of automobiles that can control themselves in certain situations and, ultimately, will drive themselves with minimal or no human assistance. Auto makers continually announce their plans for introducing automated features in upcoming models, and the industry estimates that fully self-driving vehicles will be available in less than a decade. Some vehicles that are currently available offer automated monitoring and warning features. Some are even capable of self-control in certain limited situations. Looking ahead, semi-autonomous, then fully autonomous vehicles will be phased in and driven on the roads along with traditional vehicles. Eventually, and sooner than we can easily realize, all new automobiles will be able to drive themselves, changing our lives almost as dramatically as the earliest cars impacted the lives of our ancestors.

These innovations are the result of advanced electronics that can sense, recognize, decide and act upon changes in the road environment. Auto makers, as they introduce new automated features, will face the usual factors that affect decisions about electronic systems and components: performance, size, cost, power requirements, reliability, availability and support. Add to these the importance of scalability, since systems will have to evolve from year to year, along with car model changes that bring feature additions and improvements in sensing technology. To stay on track in offering new automated capabilities, car makers will rely on technology suppliers whose scalable solutions offer the best options for balancing among all these requirements in the overall system design.

Benefits, timing of autonomous vehicle operation

Three words express the advantages offered by vehicle automation: safety, convenience and efficiency. Autonomous vehicle control will aid in eliminating many of the human errors that cause most accidents, helping to save lives, reduce injuries and minimize property damage. In addition, cars that drive themselves can chauffeur children, the elderly and disabled, free drivers to do other things while traveling, or even appear where and when needed without a human driver. Autonomous operation will also be more fuel-efficient and allow more cars to travel safely together on busy roads, saving on energy and infrastructure costs.

Of all the benefits, safety has been the top priority and is supported by many of the initial automated feature offerings. Termed Advanced Driver Assistance Systems (ADAS), these features are designed to help drivers avoid mistakes and, therefore, save lives in the near term. ADAS features will also serve as important elements of fully autonomous operation in the future.

The introduction of automated driving features will happen in phases and with increasing levels of autonomy. Using the National Highway Traffic Safety Administration (NHTSA) definitions, these levels include:

- Level 0 (no automation): In these vehicles, the driver is in full control at all times.

- Level 1 (function-specific automation): The vehicle takes control of one or more vehicle functions, such as dynamic stability control systems. Most modern vehicles fall into this category.

- Level 2 (combined function automation): This involves automation of at least two primary functions. For example, some high-end vehicles offer active cruise control and lane keeping working in conjunction, which would classify them as Level 2.

- Level 3 (limited self-driving automation): The vehicle is capable of full self-driving operation in certain conditions, and the driver is expected to be available to take over control if needed.

- Level 4 (full self-driving automation): The vehicle is in full control at all times and is capable of operation, even without a driver present.

Because of the initial relatively high cost of automated driving technologies, these features are introduced in higher-end vehicles first, but are expected to migrate soon to mid-range and economy cars. More about the phases of introduction can be found in the TI white paper Making Cars Safer Through Technology Innovation, which also discusses many of the challenges facing the automotive industry and the larger society as we transition to fully autonomous driving.

Advanced ICs provide the enabling technology

Automated features, during every phase of introduction, are based on many components, including electronic sensors that capture information about the car’s environment; sensor analog front end (AFE) devices that convert real-world data from analog to digital data; integrated circuits (ICs) for communications; and high-performance microprocessors that analyze the massive amount of sensor data, extract high-level meaning, and make decisions about what the vehicle should do. Add microcontrollers (MCUs) to activate and control brakes, steering and other mechanical functions, plus power management devices for all circuitry, and it becomes evident how much autonomous vehicle operation is dependent on advanced electronic solutions.

When selecting electronics to fulfill various functions, auto system designers must not only consider performance and price, but also how well the components fit into a scalable system. As the phased introduction of vehicle automation indicates, the more completely autonomous systems that appear later on will be built using previously introduced automated functions. For instance, surround-view cameras that are used today for park assist will be integrated eventually into the overall sensing and control system for the final phase of fully autonomous driving. Along the way, surround-view cameras will be joined by radar and 3D scanning lidar (Light Detection and Ranging) sensors that provide complementary information about what is around the vehicle. In addition to offering complementary 3D information to cameras, radar and lidar sensors are more robust under severe weather conditions. Thus, over time, the automated sensing and control system will grow more complex, integrated and effective.

The increased use of complementary sensors (such as camera, radar and lidar) shows one reason that electronic systems should be scalable, because scalability easily allows the addition of new sensors to the system. Another reason is that the individual sensors will improve over time, and will require more communications and processing bandwidth.

Cameras provide an obvious example to address the growing need for bandwidth. Cameras also play a pivotal role in sensing systems because today they are the most effective sensors for analyzing data meant for human consumption. Cameras provide an enormous data stream for communications and processing, and future increases in image resolution will magnify the load. To handle these load increases effectively, the system must be designed from the start to support rescaling.

Processing sensor inputs

In practical terms, system scaling depends on where and how the various levels of processing are performed. Figure 1 shows a functional view of the data flow in a fully equipped sensing and control system for an autonomous vehicle. At the left are the input sensors, including global positioning (GPS), inertial measurement unit (IMU), cameras, lidar, radar and ultrasound. Each sensor has a certain amount of dedicated sensor processing that processes raw data in order to create an object representation that can be used by the next stage in a hierarchical fusion system.

Figure 1. A functional view of the data flow in an autonomous car’s sensing and control system.

The conceptual view shown in the figure encompasses different types of sensor fusion occurring at various levels. For instance, raw data from a pair of cameras can be fused to extract depth information, a process known as stereo vision. Likewise, data from sensors of different modalities, but with overlapping fields of view, can be fused locally to improve the tasks of object detection and classification.
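As a rough illustration of the stereo vision step, the sketch below applies the standard pinhole relation for a rectified camera pair: depth equals focal length times baseline divided by disparity. The function name and the focal length, baseline and disparity values are illustrative assumptions, not figures from any TI system.

```python
def stereo_depth_m(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth of a point seen by a rectified stereo pair (pinhole camera model).

    disparity_px    -- horizontal shift of the point between the two images, in pixels
    focal_length_px -- camera focal length expressed in pixels (assumed equal for both cameras)
    baseline_m      -- distance between the two camera centers, in meters
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 1000 px focal length, 0.30 m between the cameras.
for disparity in (60.0, 30.0, 10.0):
    depth = stereo_depth_m(disparity, focal_length_px=1000.0, baseline_m=0.30)
    print(f"disparity {disparity:5.1f} px -> depth {depth:5.1f} m")
```

Because depth is inversely proportional to disparity, distant objects produce small disparities, so a one-pixel matching error matters far more at long range than at short range.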

Object representation provided by on-board sensors, whether originated from a single sensor or via fusion of two or more sensors, is combined with additional information from nearby vehicles and the infrastructure itself. This information comes from dedicated short-range communication (DSRC), also referred to as vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communications. On-board maps and associated cloud-based systems offer additional inputs via cellular communications.

The outputs from all the sensor blocks are used to produce a 3D map of the environment around the vehicle. The map includes curbs and lane markers, vehicles, pedestrians, street signs and traffic lights, the car’s position in a larger map of the area and other items that must be recognized for safe driving. This information is used by an “action engine,” which serves as the decision maker for the entire system. The action engine determines what the car needs to do and sends activation signals to the lock-step, dual-core MCUs controlling the car’s mechanical functions and messages to the driver. Other inputs come from sensors within the car that monitor the state of the driver, in case there is a need for an emergency override of the rest of the system.
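The data flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not TI's implementation: the `TrackedObject` fields, the `build_environment_map` and `action_engine` functions, and the 15 m braking threshold are all hypothetical names and values chosen for the example. A real system would also associate duplicate detections of the same object, which this sketch does not attempt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackedObject:
    """Hypothetical object representation emitted by a sensor block."""
    kind: str          # e.g. "vehicle" or "pedestrian"
    distance_m: float  # distance ahead of the car, in meters
    in_path: bool      # True if the object lies in the car's planned path
    source: str        # which sensor block reported it


def build_environment_map(*sensor_outputs):
    """Merge per-sensor object lists into one list, nearest object first."""
    merged = [obj for output in sensor_outputs for obj in output]
    return sorted(merged, key=lambda obj: obj.distance_m)


def action_engine(environment_map, brake_distance_m=15.0):
    """Toy decision rule: brake if anything in the path is closer than the threshold."""
    for obj in environment_map:
        if obj.in_path and obj.distance_m <= brake_distance_m:
            return "BRAKE"
    return "CONTINUE"


# Illustrative inputs from three sensor blocks (values are made up).
camera_objects = [TrackedObject("pedestrian", 12.0, True, "camera")]
radar_objects = [TrackedObject("vehicle", 40.0, True, "radar")]
dsrc_objects = [TrackedObject("vehicle", 80.0, False, "dsrc")]

env = build_environment_map(camera_objects, radar_objects, dsrc_objects)
print(action_engine(env))
```

The point of the sketch is the separation of concerns: sensor blocks emit compact object lists, one stage assembles the map, and a separate decision stage consumes it.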

Finally, it is important to inform the driver visually about what the car “understands” of its environment. Displays that help the driver visualize the car and its environment can warn about road conditions and play a role in gaining acceptance of new technology. For instance, when drivers can see a 3D map that the vehicle uses for its operations, they will become more confident about the vehicle’s automated control, and begin to rely on it.

Algorithms and system scaling

With its heavy reliance on cameras, radar, lidar and other sensors, autonomous vehicle control requires a great deal of high-performance processing, which by nature is heterogeneous. Low-level sensor processing, which handles massive amounts of input data, tends to use relatively simple repetitive algorithms that operate in parallel. High-level fusion processing has comparatively little data but complicated algorithms. Various algorithms are optimally implemented by different processing architectures, including SIMD (single-instruction, multiple data), VLIW (very long instruction word), and RISC (reduced instruction set computing) processor types. These architectures may also be aided in performing specific functions by hard-coded hardware accelerators.

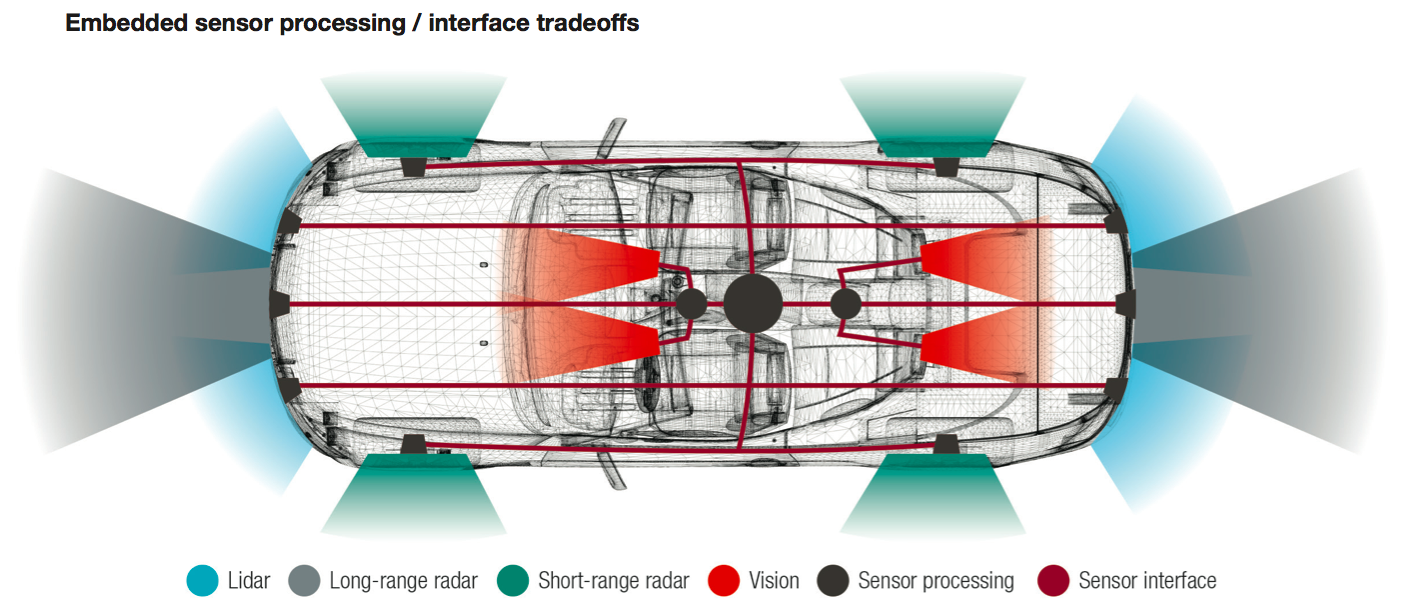

The multi-level, heterogeneous processing demanded by autonomous vehicle operation is well supported by hierarchical distributed processing, in which sensor processing is performed separately from the final-stage fusion and action engine. Figure 2 shows an example of a car with the sensor processing fully distributed near the sensors at the vehicle periphery. All of these feed outputs to the final-stage fusion and action processor that decides what the car should do and issues commands to the vehicle’s mechanical parts.

Figure 2. In the future autonomous car, sensor processing will occur throughout the car’s periphery to send signals and commands to the car’s mechanical parts.

The alternative to the distributed processing scheme shown is a fully centralized architecture, where all the processing takes place in a single multicore unit.

A system based on fully centralized processing depends on the high-speed transfer of vast amounts of data from all the sensors. Communications and processing not only have to be adequate for the current year’s model, but must build in a great deal of headroom in the initial design to accommodate sensor additions and improvements in later years. By contrast, in a distributed architecture, the sensor resolution can increase without significantly affecting the bandwidth requirements to communicate the resulting object representation.
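A back-of-the-envelope calculation makes the bandwidth argument concrete. The sketch below compares the raw data rate of a single camera with the rate of an object list produced by local sensor processing. Every number here (resolutions, 12 bits per pixel, 30 frames per second, 50 objects per frame, 64 bytes per object) is an illustrative assumption, and the sketch assumes the number of objects in a scene does not grow with image resolution.

```python
def raw_camera_rate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed camera data rate in bits per second."""
    return width * height * bits_per_pixel * fps


def object_list_rate_bps(objects_per_frame: int, bytes_per_object: int, fps: int) -> int:
    """Data rate of an object representation in bits per second."""
    return objects_per_frame * bytes_per_object * 8 * fps


FPS = 30  # assumed frame rate for both the camera and the object list

# Assumed object list: 50 objects per frame, 64 bytes each.
OBJECT_RATE = object_list_rate_bps(objects_per_frame=50, bytes_per_object=64, fps=FPS)

# Hypothetical camera generations with increasing resolution.
RESOLUTIONS = {"1 MP": (1280, 800), "2 MP": (1920, 1080), "8 MP": (3840, 2160)}

for name, (width, height) in RESOLUTIONS.items():
    raw = raw_camera_rate_bps(width, height, bits_per_pixel=12, fps=FPS)
    print(f"{name}: raw {raw / 1e6:8.1f} Mbit/s, object list {OBJECT_RATE / 1e6:5.2f} Mbit/s")
```

Under these assumptions the raw stream grows with every resolution step while the object list stays constant, which is why a distributed design leaves the in-vehicle network largely unaffected by sensor upgrades.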

Sensor fusion—processing that forms a composite understanding from two or more complementary sensors—can take place in either a distributed or centralized system. Usually the sensors being fused are close together, perhaps housed in the same unit, so that there is minimal need for high-speed communications over a wide area. The addition of radar and lidar can actually diminish the requirements for camera processing because radar and lidar provide richer, more accurate 3D information that facilitates the detection and classification of objects.
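To show how complementary sensors can sharpen an estimate, the sketch below fuses two independent range measurements of the same object using inverse-variance weighting. This is one common textbook technique, swapped in for illustration; the article does not say which fusion method is used in practice. The function name and the camera and radar values are assumptions made for the example.

```python
def fuse_estimates(value_a: float, var_a: float, value_b: float, var_b: float):
    """Combine two independent estimates of one quantity by inverse-variance weighting.

    Returns the fused value and its variance. The more certain measurement
    (smaller variance) receives the larger weight.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_value = (weight_a * value_a + weight_b * value_b) / (weight_a + weight_b)
    fused_variance = 1.0 / (weight_a + weight_b)
    return fused_value, fused_variance


# Assumed measurements of the same object: a camera range estimate with a
# large variance and a radar range estimate with a small one.
camera_range_m, camera_var = 21.0, 4.0
radar_range_m, radar_var = 20.0, 0.25

fused_range_m, fused_var = fuse_estimates(camera_range_m, camera_var, radar_range_m, radar_var)
print(f"fused range {fused_range_m:.2f} m, variance {fused_var:.3f} m^2")
```

The fused variance is always smaller than either input variance, which is the quantitative sense in which adding a second, complementary sensor improves the result.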

TI research and development for autonomous vehicles

TI has a decades-long relationship with the automotive industry, giving the company valuable expertise to create the technology needed by auto manufacturers and unique foresight into the challenges ahead. Recognizing that ADAS and self-driving features are evolving rapidly, the company devotes a significant amount of effort to identifying and developing solutions to enable autonomous vehicles. TI development includes all aspects of sensing subsystems and control integration, with methodologies to analyze data in real time.

A great deal of TI research is directed at extending the technology that will be used in autonomous vehicles. The importance of lidar as a vehicle sensing technology is undisputed, but to date lidar has been too large and costly to consider for wide adoption, a problem TI proposes to address. Other areas of research that will prove beneficial to car makers include ultrasound, high-speed data communications for sensor fusion applications, and enhancements within the vehicle sensing and user interfaces.

TI development also continues across the full spectrum of analog and embedded processing ICs required to support automotive electronics, including autonomous vehicle operation. An extensive portfolio of power management ICs, sensor signal conditioning, interfaces and transceivers supports the signal chain and power supply. Besides supplying sensor technology for the outside of the car, TI offers ultrasound sensors that can be used in the cabin, and inductive and capacitive sensors in the steering wheel, to provide information about the state of the driver. TI’s DLP® wide field of view heads-up display technology is well suited to provide display capabilities that can help drivers see what the car “knows” about the road and keep them engaged while monitoring vehicle operation, if needed.

In addition, advanced, heterogeneous processing, along with the necessary foundation software, promotes rapid algorithm and application development. For example, TI’s TDA2x system-on-chip (SoC) technology spans this range with a programmable Vision AccelerationPac containing Embedded Vision Engines (EVEs) that are specialized for handling the massive data of video systems, ISP (image signal processor) for camera preprocessing, DSP (digital signal processor) for more general signal processing, and RISC options that include several ARM® processors. In addition to its own software frameworks, TI is a key contributor to the Khronos OpenVX standard for computer vision acceleration that addresses the need for low-power, high-performance processing on embedded heterogeneous processors. The system solution is scalable throughout TI’s SoC portfolio, including MCUs, multicore DSPs and heterogeneous vision processors.

Today’s investment in future transportation

The stakes are high for auto makers worldwide, as they race to see who can introduce the most successful implementations of ADAS and autonomous vehicle operation. The phased introduction of automated features, and ongoing improvements in sensors, suggest that the electronic systems will have to be designed for scalability. Other factors influencing systems design are the fusion of complementary sensor data to provide a better 3D map of the car and its environment, the availability of low-cost lidar sensors, and other improvements in enabling hardware and software.

Summary

TI recognizes the challenges that auto manufacturers face as they strive to make self-driving cars a reality. The company invests a large amount of research in learning what is involved in autonomous vehicle operation in order to design the right solutions for auto makers’ needs. This research complements TI’s extensive portfolio of leading solutions, along with the company’s strengths in worldwide manufacturing and support. TI technology will continue to play an important role in helping automotive manufacturers implement ADAS safety features that are being phased in today, and helping them to enable the self-driving cars of tomorrow.

For more information:

- Visit our website: www.ti.com/corp-ino-auto-wp-lp

- Read our related white paper on automotive safety: www.ti.com/corp-ino-auto-wp-mc

- Watch our ADAS whiteboard video: www.ti.com/corp-ino-auto-wp-v

By Fernando Mujica, Ph.D.

Director, Autonomous Vehicles R&D, Kilby Labs, Texas Instruments