By Brian Dipert

Editor-In-Chief

Embedded Vision Alliance

Senior Analyst

BDTI

The Embedded Vision Alliance held its second quarterly Member Summit on December 6 in Dallas, TX, sponsored by Texas Instruments, following up on the premiere event back in September. One notable aspect of the December meeting, as I previewed back in early November, was the keynote delivered by Cernium's CTO Nik Gagvani. Gagvani's talk was both entertaining and informative, and attendee reviews revealed it to be a highlight of the day's activities.

Gagvani obtained a B.S. in Aerospace Engineering from the Indian Institute of Technology in Kharagpur, followed by a Ph.D. in Computer Engineering from Rutgers. His professional career began with a short stint as lead technical architect at Access Edge, followed by nine years in various technical managerial positions at Sarnoff Corporation and a subsidiary, Pyramid Vision. At the time of his keynote, Gagvani had been with Cernium for six years.

Gagvani began his talk with one of my favorite quotes, from science fiction writer Arthur C. Clarke:

Any sufficiently advanced technology is indistinguishable from magic.

His four-point "Magic of Embedded Vision" summary of the business opportunity wrapped up with a conclusion that I wholeheartedly support:

- The number of active cameras in use will exceed the human population over the next three years.

- The vast majority of these [cameras] will be interfaced with an embedded processor.

- A large fraction of these processors will be capable of running embedded vision applications.

- Embedded vision has the potential to be the magic behind hundreds of innovative products!

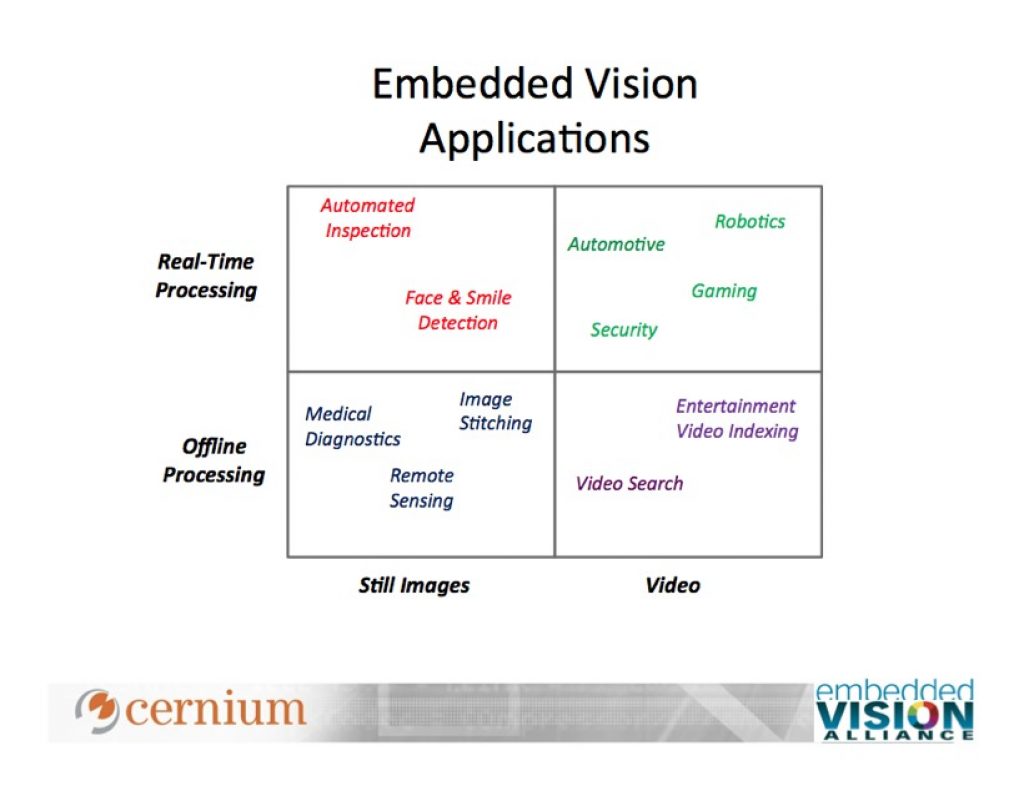

Next, Gagvani neatly subdivided the embedded vision opportunity into four quadrants, differentiated by still versus video image capture, and real-time versus offline image processing:

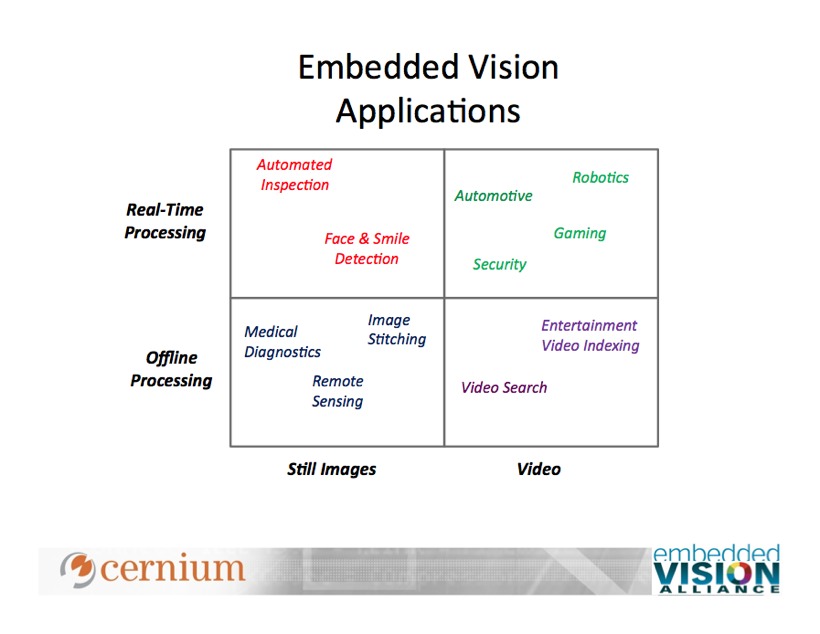

Regular visitors to the Embedded Vision Alliance website may recall Jeff Bier's three-part interview with UC Berkeley's Professor Jitendra Malik back in mid-August 2011. Many of the themes that Bier and Malik touched on in that session are echoed in Gagvani's next notable presentation graphic, which also neatly explains why Texas Instruments' Gene Frantz spends so much time working with academia, as he discussed with me last month:

As Gagvani's graphic shows, embedded vision development begins with core algorithm and other technology breakthroughs (many coming from research labs and other academic settings), proceeding from there to development frameworks, software applications, and finally hardware-plus-software products. Correlate them to the revenue line, however, and you'll see that the biggest business opportunities are at the right-most (product) end of the sequence.

Cernium, as my early-November writeup pointed out, has long been in the surveillance equipment business, beginning with large and expensive computer-based vision systems. The core rationale for Cernium's gear, which provides automated interpretation of surveillance camera inputs, is neatly summarized in Gagvani's following points:

- With the exception of web access, the ability of users to interact with surveillance video hasn’t changed in 50 years.

- You need to watch everything to see anything

- Human attention spans are limited

- Generally, nothing is happening

- Therefore, video monitoring is a major waste of time

However, Gagvani pointed out, historical efforts to combine detection technology with video monitoring were too expensive, failed to perform, or both. Motion detection taught users that there’s a lot of uninteresting motion in the world. High-end “smart” surveillance cameras and encoders typically cost more than $1000 per camera. They were also difficult to install and maintain, requiring technical knowledge and frequent analytics tuning.

Cernium's stab at a consumer surveillance solution, Archerfish, leveraged the fact that, as the Embedded Vision Alliance website states, "a major transformation is underway. Due to the emergence of very powerful, low-cost, and energy-efficient processors [and image sensors], it has become possible to incorporate vision capabilities into a wide range of embedded systems." The Archerfish project had four high-level objectives:

- Deliver relevant, real-time video information…

- …on competitively priced, easy-to-install devices

- And make full use of users' mobile electronics gear for information access…

- …without cluttering mailboxes and storage equipment

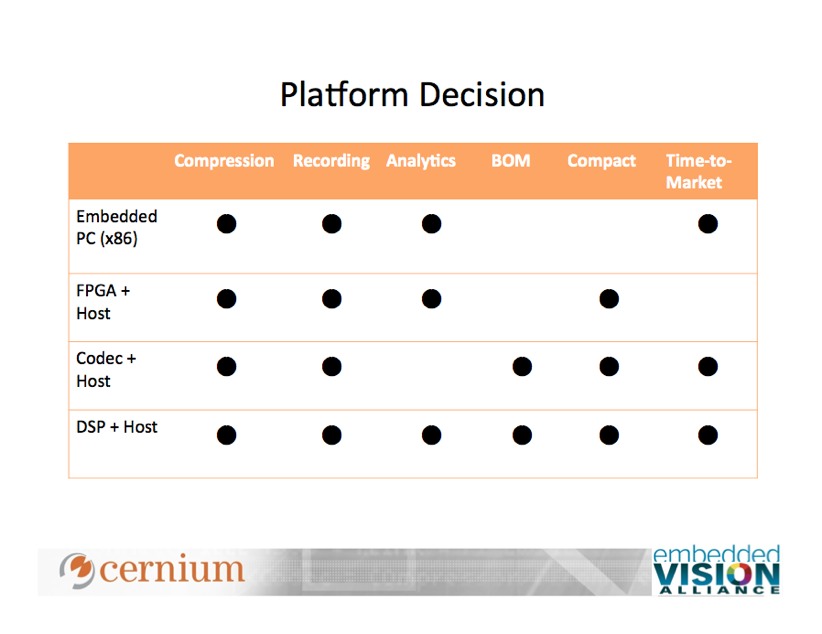

In its product planning process, Cernium came up with the following goals:

- Desired features including built-in video compression, video recording, advanced video analytics, wireless connectivity, and weatherproof construction

- A retail price under $300

- A compact, novel, cellphone-sized form factor, and

- Time-to-market of under 12 months, from lab to store shelves

Next, Gagvani compared four different candidates for embedded vision processing (the company ended up going with the last one):

The insights that Gagvani and Cernium implemented in the Archerfish project sound like they come right out of an Apple marketing manual. While the "cool factor" may be enough to sell a few systems to techie early adopters, a broad market embrace requires that a product solidly address obvious and compelling consumer needs. To wit, the product needs to sell itself via clear and concise packaging, whether sitting on a retail shelf or listed in an online catalog. And the post-sales initial installation and setup experience, along with ongoing usage, must also be rock-solid.

Gagvani then discussed seven key guidelines for embedded vision platform development, which resonated with the silicon- and software-supplier-dominated audience at the Member Summit. The top three items on his list were "software." He definitively stated that software sells the hardware, not the other way around, and that while optimized vision software component libraries are a great start, complete peripheral and driver stacks are necessary, along with informed and easily accessible support resources beyond those of a distributor (online forums, wikis, and community projects all work well, in his experience).

He reminded the audience that most embedded vision software began as a MATLAB or OpenCV proof-of-concept, transitioning from there (if initial experiments were promising) into a real-time personal computer-based implementation, and ultimately into an embedded implementation optimized for a particular platform. Pre-compiled, optimized software building blocks for vision are key, and high-level software APIs that abstract hardware greatly improve the odds of a successful embedded implementation. But a lack of standards causes confusion about which accelerated blocks to provide.

With respect to system memory architecture, Gagvani commented that embedded vision algorithms are not strictly feed-forward or single-function in nature. Pixels tend to get examined multiple times; similarly, intermediate images are revisited and reprocessed through the algorithm flow. Embedded implementations therefore require significant re-factoring to complete all pixel operations while data is on-chip, limiting algorithm choice and complicating algorithm-porting operations.
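To make the re-factoring point concrete, here is a minimal sketch, written in Python for readability rather than as embedded code. The two-stage pipeline (a vertical 3-tap blur followed by a threshold), the function names, and the strip size are all illustrative assumptions; none of them come from Gagvani's presentation. The multi-pass version builds a full intermediate image between stages, while the fused version loads a small strip of rows (plus one "halo" row on each side) and finishes every pixel operation before moving on, which is roughly what keeping data on-chip demands.

```python
def blur_rows(img):
    """Vertical 3-tap box blur with edge clamping; returns a full intermediate image."""
    h = len(img)
    out = []
    for y in range(h):
        up, down = img[max(y - 1, 0)], img[min(y + 1, h - 1)]
        out.append([(a + b + c) // 3 for a, b, c in zip(up, img[y], down)])
    return out


def threshold(img, t):
    """Binarize: 1 where the pixel exceeds t, else 0."""
    return [[1 if p > t else 0 for p in row] for row in img]


def multi_pass(img, t):
    # Stage 1 produces a whole intermediate image before stage 2 starts.
    # On a small device that intermediate would likely live in external memory.
    return threshold(blur_rows(img), t)


def fused_strips(img, t, strip=4):
    """Same result as multi_pass, computed one strip of rows at a time."""
    h = len(img)
    out = []
    for y0 in range(0, h, strip):
        y1 = min(y0 + strip, h)
        # "Load" the strip plus one halo row above and below (clamped to the image).
        lo, hi = max(y0 - 1, 0), min(y1 + 1, h)
        local = img[lo:hi]  # stands in for a small on-chip buffer
        for y in range(y0, y1):
            up = local[max(y - 1, 0) - lo]
            mid = local[y - lo]
            down = local[min(y + 1, h - 1) - lo]
            # Blur and threshold are fused: no intermediate image is stored.
            out.append([1 if (a + b + c) // 3 > t else 0
                        for a, b, c in zip(up, mid, down)])
    return out


if __name__ == "__main__":
    # Synthetic test image; the values are arbitrary.
    image = [[(x * 7 + y * 13) % 256 for x in range(8)] for y in range(10)]
    print(fused_strips(image, 100) == multi_pass(image, 100))
```

Even in this toy case the fused version needs halo handling and index bookkeeping that the multi-pass version does not. Algorithms that revisit intermediate images several times are correspondingly harder to restructure this way.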

The optics, image sensor and ISP (image signal processor) are, in Gagvani's mind, the most critical hardware components of a vision system ("garbage in gives garbage out"). With respect to the ISP, and reflective of Apical's two-part technical article series on the topic, Gagvani explicitly noted that "tuning of the ISP for compression is often at odds with tuning for vision processing."

Finally, Gagvani discussed various peripheral functions, noting the following:

- Compression is concurrent with, not shared with, vision processing

- With respect to digital video and camera interfaces, while on the one hand flexibility is good, on the other hand "canned" out-of-box solutions for popular imagers also reduce risk and time-to-market

- Multiple USB host and/or OTG (on-the-go) ports are desirable…

- …as is a high-speed system bus to mate the system processor, co-processor, FPGA and/or custom logic, and

- Encryption support may also be required

I encourage you to review Gagvani's entire presentation (3.9 MByte PDF). Also, check out a video interview I conducted with Gagvani the evening before the December EVA Summit.