This blog post was originally published on ARM's website. It is reprinted here with the permission of ARM.

The Internet of Things began to mature in 2016, and we started to see evidence of how technology can help us engage more powerfully with our world. As machines, sensors and other technologies communicate intelligently through shared data, it is becoming clear just how valuable IoT technologies might be across a variety of sectors. Home automation is one of the first things people think of, but our attention can just as easily turn to smart agriculture, automated office management, and remote monitoring and maintenance of vehicles and assets. Another high-profile area generating headlines this year has been connected vehicles, because of the technological challenges that must be addressed before autonomous cars can be unleashed onto the streets. Vision is one critical factor: your car needs to be able to identify every road hazard as well as navigate from A to B. So how can a car achieve that on an often overcrowded highway?

What is computer vision?

ARM®’s recent acquisition of Apical, an innovative, Loughborough-based imaging tech company, helps us to answer this question, as Apical has brought industry-leading computer vision technology into the ARM product roadmap. So what exactly is computer vision? It has been described as graphics in reverse: rather than us viewing the computer’s world, the computer turns around to look at ours. The computer is able to see, understand and respond to visual stimuli.

To do this, a computer needs cameras and other sensors. We have to take what is essentially just an array of pixels and teach the computer to understand what those pixels mean in context. We already use computer vision every day, possibly without even realising it. Ever used one of Snapchat’s daily filters? It uses computer vision to work out where your face is and, of course, to react when you follow the instructions (like ‘open your mouth…’). Recent Samsung smartphones use computer vision too; a nifty little feature for a bookworm like me is that the phone detects when it is in front of your face and overrides the display timeout, so the screen doesn’t go dark mid-page. These are, of course, comparatively minor examples, but the possibilities are expanding at breakneck speed, and the fact that we already take them for granted speaks volumes about the potential of the next wave.

Road signs

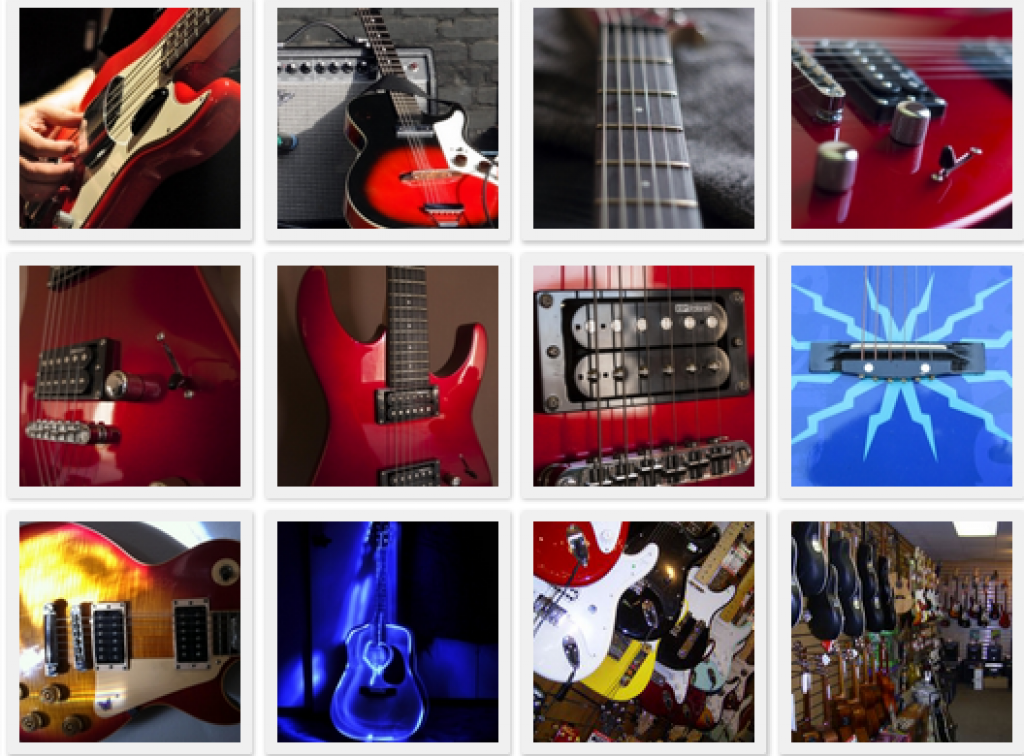

Computer vision is by no means a new idea: researchers were already working on it in the 1960s, and automatic number plate recognition systems followed in the 1970s. Deep learning, however, is one of the key technologies that has expanded its potential. Early computer vision systems were algorithm-based, discarding the color and texture of a viewed object in favor of identifying basic shapes and edges, and then narrowing down what those shapes might represent. This reduced the amount of data to be processed and let the available processing power concentrate on the essentials. Deep learning turned this process on its head: instead of working out algorithmically that a triangle of certain dimensions was statistically likely to be a road sign, why not look at a whole heap of road signs and learn to recognize them? Using deep learning techniques, a computer can look at thousands upon thousands of pictures of, say, an electric guitar and start to learn what an electric guitar looks like in different configurations, contexts, lighting conditions, backgrounds and environments. Because it sees so many variations, it also learns to recognize an item even when it is partially obscured, since it knows enough to rule out the possibility that it is anything else. Sitting behind all this intelligence are neural networks, computer models designed to mimic our understanding of how the human brain works. The deep learning process adjusts the strength of the connections between these virtual neurons as the network sees more and more guitars. With a suitably trained neural net, the computer can become amazingly good at recognizing guitars, or indeed anything else it has been trained to see.
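To make the contrast between hand-written rules and learning from examples a little more concrete, here is a minimal sketch in Python of a single artificial neuron (a perceptron) learning to tell a vertical bar from a horizontal bar in tiny 3x3 images. It is a deliberate simplification of our own, not ARM’s or Apical’s technology: a deep network stacks many layers of neurons, works on far larger images and is usually trained with gradient descent rather than the perceptron rule shown here. The core idea carries over, though. Nobody writes a rule saying which pixels matter; instead, the weights on the neuron’s connections are adjusted every time it gets a training example wrong. The images and the function names (`flatten`, `predict`, `train`) are hypothetical.

```python
from typing import List, Tuple

# Toy stand-in for deep learning: ONE artificial neuron (a perceptron).
# Hypothetical data: 3x3 images where 1 = dark pixel and 0 = light pixel.
Image = List[List[int]]


def flatten(img: Image) -> List[int]:
    """Turn the 2D pixel grid into the flat list of inputs the neuron sees."""
    return [px for row in img for px in row]


def predict(weights: List[int], bias: int, img: Image) -> int:
    """Fire (return 1, 'vertical bar') if the weighted sum of pixels is positive."""
    total = bias + sum(w * x for w, x in zip(weights, flatten(img)))
    return 1 if total > 0 else 0


def train(examples: List[Tuple[Image, int]], max_epochs: int = 20) -> Tuple[List[int], int]:
    """Perceptron learning rule: after each mistake, nudge the weights of the
    dark pixels toward the correct answer. Integer steps avoid float rounding."""
    weights = [0] * 9
    bias = 0
    for _ in range(max_epochs):
        mistakes = 0
        for img, label in examples:
            error = label - predict(weights, bias, img)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                for i, x in enumerate(flatten(img)):
                    weights[i] += error * x
                bias += error
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


# Labelled training examples: 1 = vertical bar, 0 = horizontal bar,
# including "noisy" variants with one extra dark pixel.
training = [
    ([[0, 1, 0], [0, 1, 0], [0, 1, 0]], 1),
    ([[0, 0, 0], [1, 1, 1], [0, 0, 0]], 0),
    ([[1, 1, 0], [0, 1, 0], [0, 1, 0]], 1),
    ([[0, 0, 0], [1, 1, 1], [0, 0, 1]], 0),
]

weights, bias = train(training)

# Images the neuron has never seen, with the noise in a different corner.
unseen_vertical = [[0, 1, 0], [0, 1, 0], [0, 1, 1]]
unseen_horizontal = [[1, 0, 0], [1, 1, 1], [0, 0, 0]]
print(predict(weights, bias, unseen_vertical))    # 1
print(predict(weights, bias, unseen_horizontal))  # 0
```

After training, the learned weights are positive on the top and bottom pixels of the middle column and negative on the left and right pixels of the middle row, which is why the neuron still classifies the noisy images it was never shown.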

The ImageNet competition tests how accurately computers can identify specific objects in a range of images

ImageNet competition

A key milestone for the adoption of deep learning came at the 2012 ImageNet competition. ImageNet is an online research database of more than 14 million images, and it runs an annual competition that pits machines against each other to establish which makes the fewest errors when asked to identify objects in a series of pictures. The 2012 contest was the first in which a team entered a solution based on deep learning. Alex Krizhevsky’s system wiped the floor with competitors using more traditional methods, and it started a revolution in computer vision. The world would never be the same again. The following year there were, of course, multiple deep learning entries, and Microsoft recently broke records when its system outperformed the human benchmark in the challenge.

A particularly exciting aspect of welcoming Apical to ARM is its Computer Vision interpretation engine, which takes data from video and a variety of sensors and produces a digital representation of the scene it is viewing. This allows, for example, security staff to monitor the behaviour of a crowd at a large event or in public areas and raise an alert before overcrowding becomes an issue. It also opens the door for vehicles and machines to process their surroundings independently and use this information to make smart, contextually aware decisions.

We can simultaneously interpret different aspects of a scene from an overall digital representation

This shows us how quickly technology can move and gives some idea of its potential, particularly for autonomous vehicles, as we can now see how precisely they could quantify the hazard posed by a child at the side of the road. What happens, though, when a vehicle has a choice to make? It can differentiate between children and adults and assess that the child is statistically more likely to run into the road. However, if an accident is imminent and the only way to avoid it is to cause a different one, how can it be expected to choose? How would we choose between running into a crowded bus stop and a head-on collision with another car? By instinct, or perhaps through an internal moral code? At what point does the potential of these machines to think for themselves become the potential for them to discriminate or produce prejudicial responses? There is, of course, a long way to go before we see this level of automation, but the speed at which the industry is advancing suggests these issues, and their solutions, will arrive sooner rather than later.

ARM’s acquisition of Apical comes at a time when the opportunity to exploit the full potential of technology is becoming increasingly important. We intend to be on the front line of ensuring that computer vision adds value, innovation and security to the future of technology and automation. Stay tuned for more detail on upcoming devices and technologies, and on ARM’s approach to the future of computer vision and deep learning.

By Freddi Jeffries

Content Marketer, ARM