This blog post was originally published at Cadence's website. It is reprinted here with the permission of Cadence.

The opening keynote at the Embedded Vision Summit in Santa Clara was by Marc Pollefeys, who is a director of science at Microsoft in Redmond, working on advanced capabilities for Hololens, and a professor at ETH Zurich. I'm betting he has a lot of Swissair miles. Marc spent most of his time giving us a look under the hood of the Hololens and potential future applications. At $3,000 a pop, it is priced not for the mass market but as a research and development tool. However, Roger Walkden, Microsoft's commercial lead for the product, said in January that they had sold "thousands, not hundreds of thousands…that's all we need."

If you get a chance to try the Hololens, then take it. We have a couple at Cadence and I spent 10 minutes trying it out. In fact, I started out one of my What's for Breakfast? videos wearing it. It is different from virtual reality goggles in that the display is see-through, so you can see the real environment around you. The Hololens also senses the environment as you move around so that it knows where the walls and furniture are. This allows it to do things like put images on the wall, or bring up another person/avatar so that it appears that they are standing behind a real physical table. The interface uses a menu a bit like any laptop, although the pointing device is your eyes: the cursor moves where you look, and the mouse button is your finger. You look somewhat idiotic clicking your finger in mid-air, but it works.

Microsoft calls the technology "mixed reality", since you can see the real world completely normally, and then the computing platform can throw up information and allow you to interact with it. Marc joined Microsoft to work on it and thinks it will be the next generation of personal computing devices, after PCs and smartphones. Of course, the price will have to come down for that to happen, which is challenging given how much technology there is inside. In the Q&A at the end, he said it would take 5-10 years, just like it took to get from the first smartphone to the amazing capability that we have today.

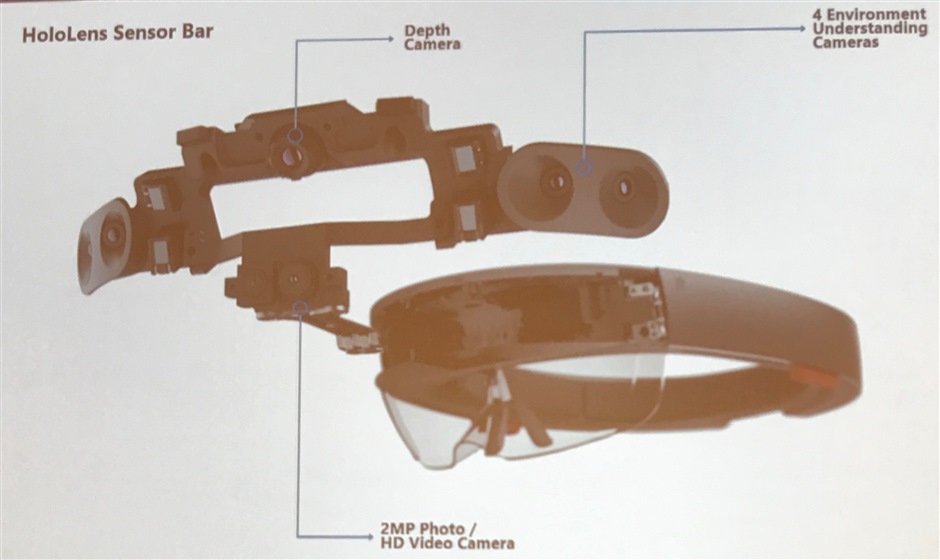

Cameras and Displays

The Hololens has a total of six cameras. Four are environmental understanding cameras, two of which can also be used to give front-facing stereo vision. There is an additional depth camera. Then there is a 2MP photo and HD video camera. This last camera is not used by the Hololens operating system itself; it is intended for applications to use.

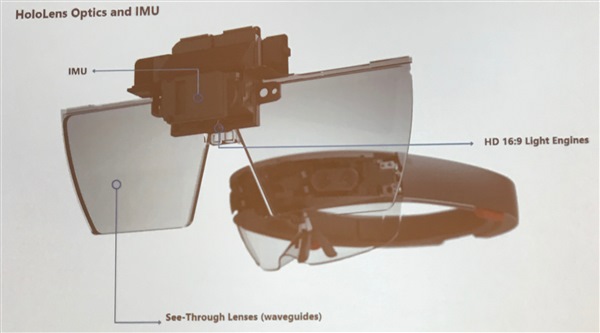

The "glasses" you look through are actually displays. They are also waveguides with 16:9 light engines to provide immersive graphics. There is also the IMU, the inertial measurement unit. This is an important part of the whole Hololens experience because there is absolutely no discernible latency between moving your head and the image tracking across the display, so the effect is that the image appears to stay in exactly the same place in the room. The virtual reality headsets I have tried had noticeable lag. I never had a problem, but Jeff Bier, who started this conference as a little side project five years ago, said that Hololens is the first headset that didn't give him a headache after about 15 minutes.

There are also several microphones and speakers, so that you can interact with objects outside the field of vision. If a person speaks to one side of you, for example, you can turn your head and there they are. As with the display, sound blends the actual environment around you with computer-generated sound of any type: if a real person talks to you, you can still hear them. There is little difference between interacting with a real person and a simulated person wearing another Hololens somewhere else. You can see and hear them as if they were there.

Processors

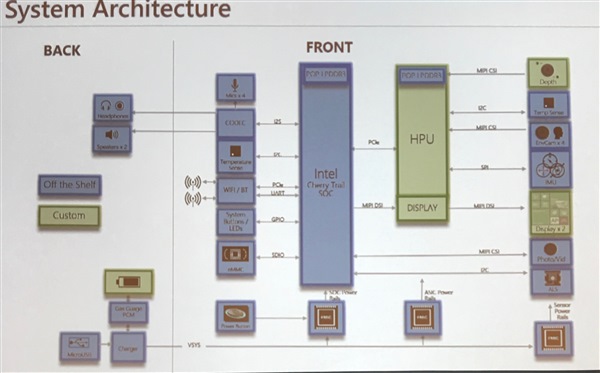

There are two processors running inside the Hololens. One is an Intel Cherry Trail x86 architecture processor running Windows 10. The other is the HPU, the holographic processing unit. The name is actually a misnomer if you expect true 3D laser interference holograms. The HPU is where the Tensilica processors are. The main logic board also contains 64GB of flash and 2GB of RAM (1GB for the main CPU and 1GB for the HPU). The block diagram is above, with the green boxes being proprietary Microsoft technology, and blue being off-the-shelf components.

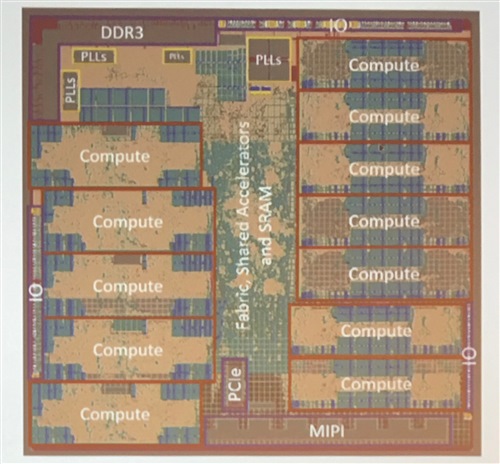

The picture above is a die photo of the HPU. It is built on TSMC 28nm HPC with about 65M logic gates and 8MB SRAM, in a 12mm square BGA package (at 0.4mm pitch). The DRAMs are in separate packages with 1GB LPDDR3 (also 0.4mm pitch). A lot of the processing goes on in the Hololens itself, so the bandwidth needed to the cloud is actually small. There are standalone accelerators as well as accelerators tightly coupled to the Tensilica DSPs. This approach provides a speedup of 200X compared to just running on the Intel processor. The HPU, despite all those processors, actually has a lower power budget than the main Intel SoC, dissipating less than 10W.

Capability

The Hololens sensors and cameras mean that it basically understands the environment and what the user is doing in it. It has six-degrees-of-freedom localization, visual inertial odometry, head tracking, and gaze direction.

The depth perception camera uses infrared. It projects IR out and then measures time of flight to detect how far away objects are. This is in addition to using two cameras as stereo to extract the scale of the environment. The model of the environment is extracted and built up using multiple Hololens, and it persists beyond a single session. It is possible for one person with a Hololens to leave a virtual object somewhere and for another person to see it and pick it up later. Things like the walls of the building are detected and uploaded to the cloud as people move around, so there is no need for a map of the building to be supplied in advance. It seems to work really well, even with white walls lacking features, and in a building like the one where Marc works, with very similar corridors and rooms on each floor. For the user interface, there is gesture and hand tracking.
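The two depth cues mentioned above reduce to simple formulas in their idealized form. The sketch below is only an illustration, not the Hololens implementation: it assumes a direct pulse time-of-flight measurement and a rectified pinhole stereo pair, and the function names and example numbers are made up for this post.

```python
# Idealized depth formulas (illustrative sketch, not Hololens code).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a surface from the round-trip time of an IR pulse.

    The light travels out and back, so the one-way distance is c * t / 2.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Depth from a rectified stereo pair: Z = f * B / d.

    focal_length_px: focal length in pixels (assumed equal for both cameras)
    baseline_m: distance between the two camera centers, in meters
    disparity_px: horizontal pixel shift of the same point between images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # A pulse that returns after 10 nanoseconds hit something about 1.5 m away.
    print(f"{tof_distance_m(10e-9):.3f} m")
    # Hypothetical camera: 500 px focal length, 10 cm baseline, 25 px disparity.
    print(f"{stereo_depth_m(500.0, 0.10, 25.0):.3f} m")
```

The known baseline between the two cameras is what lets stereo recover absolute scale, which is why the two techniques complement each other.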

One area of ongoing research is the split of work between the headset and the cloud. Applications can run on-device or ship part of their data to the cloud. For example, because the headset keeps track of where you are in the environment, it can take an image, ship it to the cloud, and then get the result back. In the meantime, you have moved on, but it can adjust and put the label in the right place anyway. Marc had a video of walking around in which the floor was being colored blue, walls red, furniture grey, and so on, as they were recognized. But the identification was slotting in asynchronously, having been done up in the cloud.
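One way to picture how a late result can still land in the right place: remember the pose at which the image was captured, convert the returned label position into world coordinates using that pose, and then re-express it relative to wherever the user is now. The sketch below is a simplified 2D version of that idea under my own assumptions (a flat floor, x pointing forward and y to the left in the headset frame). The `Pose2D` class and `place_late_label` function are hypothetical names, not part of any Microsoft API.

```python
# Placing a label that arrives from the cloud after the user has moved
# (simplified 2D sketch with hypothetical names, not Hololens code).
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Headset position (meters) and heading (radians) in the world frame."""
    x: float
    y: float
    heading_rad: float

    def to_world(self, local_x: float, local_y: float) -> tuple[float, float]:
        """Convert a point from this pose's frame into world coordinates."""
        c, s = math.cos(self.heading_rad), math.sin(self.heading_rad)
        return (self.x + c * local_x - s * local_y,
                self.y + s * local_x + c * local_y)

    def to_local(self, world_x: float, world_y: float) -> tuple[float, float]:
        """Convert a world point into this pose's frame (inverse of to_world)."""
        dx, dy = world_x - self.x, world_y - self.y
        c, s = math.cos(self.heading_rad), math.sin(self.heading_rad)
        return (c * dx + s * dy, -s * dx + c * dy)


def place_late_label(capture_pose: Pose2D, current_pose: Pose2D,
                     label_in_capture_frame: tuple[float, float]) -> tuple[float, float]:
    """Where to draw a label now, given where it was seen at capture time."""
    world_point = capture_pose.to_world(*label_in_capture_frame)
    return current_pose.to_local(*world_point)


if __name__ == "__main__":
    captured_at = Pose2D(0.0, 0.0, 0.0)   # pose when the image was taken
    now = Pose2D(1.0, 0.0, 0.0)           # user walked 1 m forward since then
    # The cloud says the object was 2 m straight ahead in the captured image.
    print(place_late_label(captured_at, now, (2.0, 0.0)))
```

Because the label is stored in world coordinates, the delay in the cloud round trip only affects when the label appears, not where.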

Scientific American 5 of the Best Designed Products Ever

The Hololens team was surprised when Scientific American picked Hololens as one of the five best designed products ever. It is an eclectic list since one of the other five is the humble paperclip, and another is the AT&T dial-phone.

From the outside, the Hololens looks uncomplicated. But when you see an exploded diagram of all its parts, you realize how much there is in it.

Learn More

There is a half-day course on building Hololens applications at CVPR in Honolulu in July. This will include examples of both on-device and off-device code. People will no doubt come up with ideas Microsoft hasn't even considered, but for now the sweet spot seems to be deskless workers who interact with the real world and need their hands to be free. The videos that Marc showed had an elevator maintenance technician, a group of people planning the layout of a new store, and medical students looking at cadavers where parts could be made translucent.

The "application" I used was a game where insects appeared on the actual office walls and you had to shoot them. If they fired back, you could avoid getting hurt by ducking under the fireballs. It was a demonstration that used a lot of the technology: sensing the walls, making graphics appear merged with the real world, sensing where you looked, whether you ducked, adding sound for stuff happening behind you. When insects came through the wall, it really looked as if the wall had been burst open (somehow I don't think the Cadence offices actually have bricks in our walls, but…can't be perfect).

For more information on Tensilica Vision DSPs and the (announced that day) Vision C5 Neural Network DSP, see the Tensilica Vision DSP page.

By Paul McLellan

Editor of Breakfast Bytes, Cadence