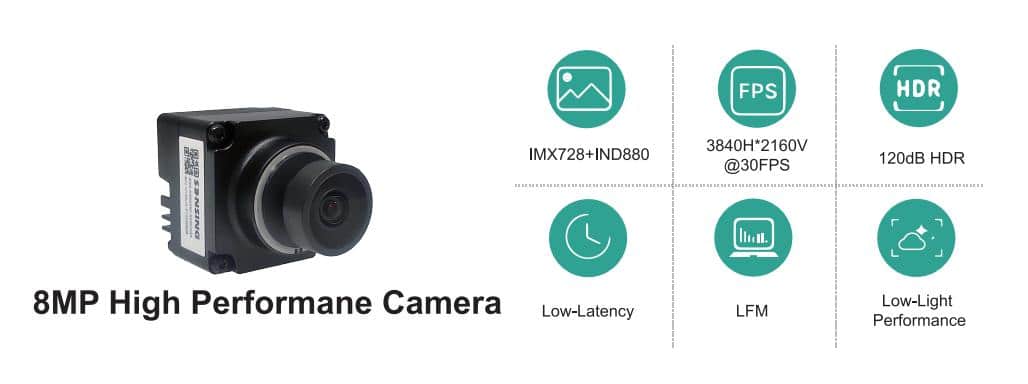

How Embedded Vision Is Shaping the Next Generation of Autonomous Mobile Robots

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems.

Autonomous Mobile Robots (AMRs) are being deployed across industries, from warehouses and hospitals to logistics and retail, thanks to embedded vision systems. See how cameras are integrated into AMRs so that they can quickly and […]
