This article was originally published at John Day's Automotive Electronics News. It is reprinted here with the permission of JHDay Communications.

Thanks to the emergence of increasingly capable and cost-effective processors, image sensors, memories and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide range of embedded systems, enabling those systems to analyze their environments via video and still image inputs. Automotive ADAS (advanced driver assistance systems) designs are among the early success stories in the burgeoning embedded vision era, and their usage is rapidly expanding beyond high-end vehicles into high-volume mainstream implementations, and into a diversity of specific ADAS applications.

By Brian Dipert

Editor-in-Chief

Embedded Vision Alliance

Tim Droz

Vice President and General Manager, SoftKinetic North America

SoftKinetic

Stéphane Francois

Program Manager

CogniVue

and Markus Willems

Senior Product Marketing Manager, Processor Solutions

Synopsys

ADAS (advanced driver assistance systems) implementations are one key example of the exponential increase in both the overall amount and the functional capability of electronics hardware and associated software in automobiles, trends which are forecast to continue (if not accelerate) into the foreseeable future. Image sensor-based ADAS applications are becoming increasingly common, both standalone and as a supplement to other sensing technologies such as radar, LIDAR, infrared, and ultrasound. From its humble origins as a passive rear-view camera, visually alerting a driver to pedestrians and other objects behind the vehicle, vision-based ADAS has become more active (e.g., applying the brakes as necessary to prevent collisions), has extended to cameras mounted on other areas of the outside of a vehicle (for front collision avoidance purposes, for example), and has even expanded to encompass the vehicle interior (sensing driver drowsiness and distraction, implementing gesture interfaces, etc.).

What's fueling this increasing diversity of both per-vehicle camera counts and uses? It's now practical to incorporate computer vision into a wide range of systems, enabling those systems to analyze their environments via video and still image inputs. Historically, such image analysis technology has mainly been found in complex, expensive systems, such as military equipment and manufacturing quality-control inspection systems. However, cost, performance and power consumption advances in digital integrated circuits such as processors, memory devices and image sensors, along with the emergence of robust software algorithms, are now paving the way for the proliferation of visual intelligence into diverse and high-volume embedded system applications such as automobiles. As cameras deliver higher frame rates and the processors connected to them become increasingly powerful, the combination of the two is able to simultaneously tackle multiple vision processing tasks previously requiring numerous camera-plus-processor combinations. This multi-function integration reduces overall cost and power consumption, both factors critical in ADAS applications.

By "embedded system," we're referring to any microprocessor-based system that isn’t a general-purpose computer. Embedded vision, therefore, refers to the implementation of computer vision technology in embedded systems, mobile devices, special-purpose PCs, and the cloud, with ADAS implementations being one showcase application. Embedded vision implementation challenges remain, but they're more easily, rapidly, and cost-effectively solved than has ever before been possible. Reflective of this fact, vision-based ADAS implementations are not only rapidly multiplying in per-vehicle count but also rapidly expanding beyond high-end vehicles into high-volume mainstream implementations, and into a diversity of specific ADAS applications. And an industry alliance comprised of leading product and service suppliers is a key factor in this burgeoning technology success story.

Object Detection

Object detection is a base technology that applies to a wide range of ADAS applications. The complexity involved in object detection depends heavily on the traffic situation, along with the type of object to be detected. Stationary applications allow for short-range sensors such as ultrasound, while dynamic applications call for radar or camera-based sensors. Detecting metal-based objects such as vehicles is feasible with radar-based systems, but robustly detecting non-metal objects such as pedestrians and animals necessitates camera-based systems (Figure 1). The subsequent driver warning may take the form of a dashboard visual notification, an audible tone, and/or other measures.

Figure 1. Pedestrian detection algorithms are much more complex than those for vehicle detection, and a radar-only system is insufficient to reliably alert the driver to the presence of such non-metallic objects.

Blind-spot detection, a specific object detection implementation, helps to avoid incidents related to objects in areas outside of the vehicle that the driver is unable to see. Stationary, slow-moving and fast-moving applications of the concept all exist. Parking distance discernment is a well-known, albeit simple, example of a blind-spot detection and warning system, historically based mostly on ultrasound sensors. More recently, camera-based systems have been deployed, such as the surround-view arrangement offered by BMW, wherein the input from multiple cameras is combined to provide the driver with a "bird's eye" view of the car and its surroundings.

For fast-moving scenarios, blind-spot object detection finds use when the vehicle is changing lanes, for example. It is similar in concept to a lane-change warning (covered next), but with specific attention on the left and right regions of the vehicle. Historically based on radar technology, it minimally issues a warning if an object is detected on either side of the vehicle. Implementations may extend to active intervention (discussed in more detail later in this article), as with Infiniti’s M-series cars. Government legislation may stimulate wide deployment, especially for commercial vehicles, since blind-spot related accidents in urban environments account for the majority of serious and fatal accidents involving cyclists and pedestrians. Extending blind-spot detection beyond discerning metallic objects to detecting pedestrians, animals and the like requires camera-based systems implementing complex algorithms.

Lane Change Notification

Drifting out of a given traffic lane is a common-enough problem to have prompted road safety agencies to mandate grooves in the pavement, i.e., the so-called “rumble” strip, to alert drivers. However, it is unacceptably expensive to add and maintain grooves on all roads, so an alternative is to have the vehicle use an embedded vision system to constantly track its position in the lane. Commonly known as an LDWS (lane departure warning system), the lane-change notification relies on one or multiple forward-facing cameras to capture images of the roadway ahead. Vision processing analyzes the images to extract the pavement markings and determine the position of both the lanes and vehicle.

The LDWS then informs the driver whenever the vehicle begins to move out of its lane without the corresponding turn signal having been activated. Passive warnings can include haptic cues, such as a vibrating steering wheel that mimics the familiar feel of the rumble strip. And beyond just warning the driver, active safety systems (discussed next) include lane-keeping features that override the driver's steering wheel control, correcting the vehicle's direction and thereby maintaining lane placement.
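The warning rule just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not any supplier's implementation: the `LaneState` structure, the `should_warn` function, and the 0.2 m margin are hypothetical names and values chosen for the example, and the vision stage is assumed to have already measured the lateral distance from the vehicle centerline to each lane marking.

```python
from dataclasses import dataclass


@dataclass
class LaneState:
    """Hypothetical per-frame output of the vision stage (illustrative only)."""
    left_marking_m: float   # lateral distance from vehicle centerline to left marking
    right_marking_m: float  # lateral distance from vehicle centerline to right marking


def should_warn(lane: LaneState, vehicle_width_m: float,
                left_signal_on: bool, right_signal_on: bool,
                margin_m: float = 0.2) -> bool:
    """Return True if the vehicle edge is within margin_m of a lane marking
    on a side whose turn signal is off. The margin is an assumed value."""
    half_width = vehicle_width_m / 2.0
    drifting_left = lane.left_marking_m - half_width < margin_m
    drifting_right = lane.right_marking_m - half_width < margin_m
    # A drift toward a side with an active turn signal is treated as intentional.
    return (drifting_left and not left_signal_on) or \
           (drifting_right and not right_signal_on)
```

A production system would also filter the measurements over several frames and estimate the time remaining before the lane crossing, rather than thresholding a single frame as this sketch does.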

Active Collision Avoidance

Active collision avoidance is one of the hottest topics in ADAS, as it is expected to have a significant positive impact on overall traffic safety, both in preventing accidents and in reducing the impact of an accident. It involves not only detecting dangerous situations, as discussed earlier, but also taking specific actions in response. These actions may range from the activation of safety measures such as boosting the braking power and tightening the safety belts, all the way to autonomous emergency braking.

Using rear-end accident avoidance as an example, Volvo implemented an automatic braking system in its XC60 series, which resulted in an insurance claim decrease of 27% as compared to the same model and similar SUVs without this technology, according to a study conducted by the HLDI (Highway Loss Data Institute) (Reference 1). Insurance companies have consequently begun offering discounts for vehicles that are equipped with this feature; many vehicles today also offer front collision avoidance systems.

Active collision avoidance sensor systems historically were exclusively radar-based, but are gradually being enhanced and complemented by cameras (often 3D depth-sensing ones). Camera-based systems come into play whenever desired detection extends beyond other vehicles to include more general objects, with pedestrian detection being the most prominent example. Pedestrian detection algorithms are much more complex than those for vehicle detection, as it is necessary (for example) to scan not only the road but also the road boundaries. The 2013 Mercedes S-class applies stereoscopic cameras to detect pedestrians, generating a three-dimensional view for 50 m in front of the vehicle, and monitoring the overall situation ahead for about 500 m. Pedestrian detection is active up to 72 km/h (45 mph) and is able to completely avoid collisions by autonomously braking in cases where the vehicle's initial speed is up to 50 km/h (31 mph).
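To get a feel for how the speeds quoted above relate to the 50 m stereoscopic range, the following back-of-the-envelope Python sketch estimates stopping distance as latency travel plus braking travel, v·t + v²/(2a). The deceleration (8 m/s²) and system latency (0.5 s) are illustrative assumptions, not figures published by Mercedes, and `stopping_distance_m` is a hypothetical helper name.

```python
def stopping_distance_m(speed_kmh: float,
                        decel_mps2: float = 8.0,
                        latency_s: float = 0.5) -> float:
    """Distance travelled before standstill: distance covered during the
    detection/actuation latency plus the braking distance v^2 / (2a).
    decel_mps2 and latency_s are assumed values for illustration."""
    v = speed_kmh / 3.6  # convert km/h to m/s
    return v * latency_s + v * v / (2.0 * decel_mps2)


for speed in (50, 72):
    print(f"{speed} km/h: {stopping_distance_m(speed):.1f} m")
```

Under these assumptions, the stopping distances work out to roughly 19 m at 50 km/h and 35 m at 72 km/h, both inside the 50 m three-dimensional view. Actual figures depend on road surface, tires, and how early the pedestrian is classified.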

Government legislation, as previously mentioned, is fueling the deployment of active collision avoidance systems due to their notably positive safety impacts. Effective November 1, 2013, for example, the European Union requires all new versions of commercial vehicles to be equipped with active forward collision avoidance systems, including autonomous braking. Once fully deployed, such systems are expected to prevent up to 5,000 fatalities and an additional 50,000 serious injuries per year.

Adaptive Cruise Control

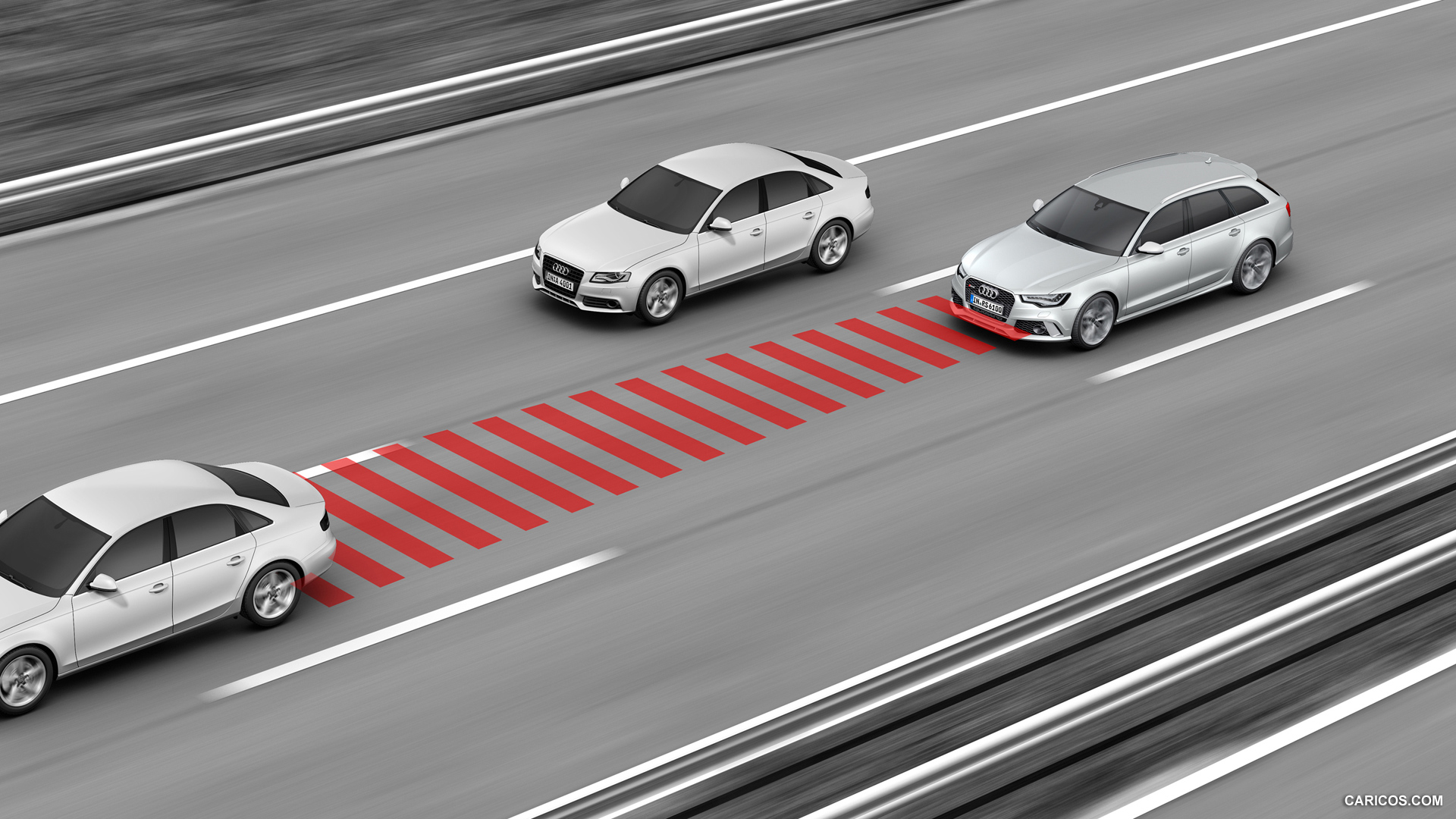

ACC (adaptive cruise control) is an intelligent form of cruise control that automatically adjusts a vehicle's speed to keep pace with traffic ahead (Figure 2). In most cases, ACC is radar-based today, although camera-based and camera-enhanced systems are also available and will become increasingly common in the future. Audi, for example, offers ACC for a speed range up to 200 km/h (124 mph), useful on German autobahns. More generally, ACC is helpful in scenarios such as everyday rush-hour traffic, especially on highways, where ACC systems enable near-autonomous driving. Advanced camera-based ACC systems also consider traffic sign recognition-generated data, discussed next, in adjusting the vehicle's pace.

Figure 2. Adaptive cruise control automatically adjusts a vehicle's speed to keep pace with traffic ahead, and can incorporate camera-captured and vision processor-discerned speed limit and other road sign data in its algorithms.
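As a rough illustration of the behavior described above, the following Python sketch uses a constant time-gap policy, one common textbook approach to ACC and not necessarily what any particular automaker ships. The function name, the 2-second gap, and the gain value are assumptions made for the example. The optional `speed_limit_mps` argument stands in for a limit supplied by traffic sign recognition.

```python
from typing import Optional


def acc_target_speed(own_speed_mps: float,
                     set_speed_mps: float,
                     lead_distance_m: Optional[float] = None,
                     lead_speed_mps: Optional[float] = None,
                     speed_limit_mps: Optional[float] = None,
                     time_gap_s: float = 2.0,
                     gap_gain_per_s: float = 0.5) -> float:
    """Return a target speed in m/s (hypothetical helper, assumed parameters)."""
    # The driver's set speed is the ceiling, optionally capped by a
    # recognized speed limit.
    ceiling = set_speed_mps
    if speed_limit_mps is not None:
        ceiling = min(ceiling, speed_limit_mps)

    # With no vehicle detected ahead, behave like ordinary cruise control.
    if lead_distance_m is None or lead_speed_mps is None:
        return ceiling

    # Constant time-gap policy: the desired following distance grows with speed.
    desired_gap_m = time_gap_s * own_speed_mps
    gap_error_m = lead_distance_m - desired_gap_m

    # Match the lead vehicle's speed, corrected in proportion to the gap error.
    target = lead_speed_mps + gap_gain_per_s * gap_error_m
    return max(0.0, min(target, ceiling))
```

For example, with the vehicle travelling at 30 m/s, a lead vehicle 40 m ahead moving at 20 m/s yields a target well below the lead vehicle's speed, so the gap reopens. With no lead vehicle, the function simply returns the set speed or the recognized limit, whichever is lower.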

Road Sign Monitoring

Are speeding tickets a relic of the past? ADAS technology aspires to make this scenario come to pass. Speed is regularly identified as one of the primary causes of accidents. Vision technology is now assisting drivers by alerting them when the vehicle is above speed limits; it can also adjust the vehicle speed automatically if the driver takes no action in response. This advanced form of adaptive cruise control is yet another step on the road to autonomous vehicles (Figure 3).

Figure 3. Many of the ADAS technologies discussed in this article are stepping stones on the path to the fully autonomous vehicle of the (near?) future.

The forward-facing camera sends images to the ADAS processor, which detects and reads road signs using advanced classifier algorithms. TSR (traffic sign recognition) can be a challenging embedded vision problem, especially with new LED-based signs that exhibit high-frequency flickering. Signs can also be occluded or unclear due to poor weather or lighting conditions. All of these issues impose a requirement for high frame rates and high resolutions: 1 Mpixel in systems now being designed, going to 2 Mpixels and higher in the near future.

Face Recognition

"Where are the car keys?" is a question that drivers may not need to worry about for much longer, if our cars begin recognizing us via next-generation embedded vision systems. Smartphones, tablets, computers, smart TVs and game consoles already use face recognition to log us in and customize our user settings. The same conveniences we expect with consumer products are propagating to our vehicles too, and the possibilities are exciting.

For example, once the vehicle recognizes your face as you approach it and unlocks itself in response, it can also customize driver-specific preferences such as seat and mirror positions, radio stations and wireless communications settings. Vision technology for vehicles has up to now been focused on safety. However, embedded vision applications in automotive based on face recognition will soon also provide a range of driver convenience features.

Driver and Passenger Position and Attention

To effectively monitor and react to an impending emergency situation, discerning both the driver's position and visual perspective is critical. If a driver isn’t looking at a vehicle stopped ahead, for example, or his foot isn’t close to the brake while coming up on a pedestrian, the vehicle can either alert him or proactively take control of the brake systems in order to prevent a potentially lethal collision. And if a collision is imminent, despite measures taken to preclude it, precise discernment of the driver's and passengers' body positions is useful in optimally deploying air bags.

Depth-sensing camera technology enables accurate monitoring of the driver’s head position as an aid to determining gaze direction, and can also ascertain more general body position for driver and passengers alike. Gaze-tracking technology monitors a driver's eye locations and orientations, and can even operate through sunglasses. Using another 3D image sensor, the vehicle ADAS system can tell if the driver’s legs and feet are in the proper position to apply the brake. Head-tracking 3D cameras can also be used to provide a perspective-correct HUD (heads-up display) overlay of the scene in front of the driver, increasing the HUD's richness and prompting more accurate and timely driver response.

Gesture Interfaces

Despite numerous attempts to use technology to make operating a vehicle safer, driving has become an even more distraction-filled experience. IVI (in-vehicle infotainment) systems offer expanded built-in media and cell phone services, while climate control functions are moving from knob and button-based controls to on-screen menu selections. All of this capability requires a driver to interact constantly with the in-dash display.

3D camera-based gesture recognition technologies are able to accurately track hand and finger movements as close as six inches away, allowing a driver to control the cockpit systems with a simple wave of the hand or lift of the finger. This ability enables the driver to remain focused on the road ahead while still navigating the wide variety of available functions, including phone, navigation, temperature and airflow, and music and other entertainment sources.

Other Opportunities

As previously mentioned, the increasing resolutions and frame rates of image sensors, along with the increasing performance of vision processors, are enabling a single camera-plus-processor embedded vision system to simultaneously tackle several functions. The same interior camera that discerns a driver's head and body position, for example, can be used to implement gesture control. And the same front- and rear-mounted external cameras employed for collision avoidance, adaptive cruise control, lane-change notification and road sign monitoring functions can also find use in autonomous parking systems. Autonomous parking, building on the foundation of now-widespread passive distance detection systems, actively takes over steering and braking functions in order to navigate a vehicle into a parking space. As with many of the other technologies discussed in this article, it's another important step on the path to the fully autonomous vehicle.

And what else can front-mounted cameras be used for? Object detection can be employed not only for avoiding collisions but also for temporarily decreasing headlight intensity in order to avoid blinding drivers of oncoming vehicles, pedestrians and animals, for example. And Mercedes-Benz has come up with another clever implementation: after a stereo camera array installed in the vehicle senses irregularities in the road surface ahead, the Magic Body Control system (the company's brand name for active suspension) automatically adjusts the springs and shock absorbers to dampen the impact. As a video published by the company makes clear, the results can be dramatic (Reference 2).

Case Studies

The automotive industry has already delivered initial-generation ADAS systems. Now the primary focus is on adding features and driving down cost. Reducing cost makes ADAS available not only in niche luxury vehicles but also in high-volume entry-level vehicles. Under pressure to find ways to implement these highly advanced, computationally intensive systems without driving up costs, automotive subsystem suppliers and OEMs have been working with semiconductor companies to define power-optimized and integrated solutions. The resulting SoCs sometimes include dedicated vision-processing cores.

Ideally, automakers want to leverage a common "smart" processing platform combining vision (both visible and infrared), radar and ultrasonic sensor information. The processor platform ideally should scale with different feature sets across various vehicle classes, and enable automakers to re-use as much as possible of the algorithm and application code base. These attributes are feasible with a scalable family of processors or processor platform designs. The specific components used in upcoming ADAS systems are highly confidential, so a detailed review of leading-edge implementations is not possible, but it is interesting to look at some of the more recent systems that have already been introduced as an indication of what's coming next.

So far, this article has highlighted numerous current implementations of various ADAS concepts. Here are several additional case studies for your consideration. With the Euro NCAP (European New Car Assessment Program), for example, the 5-star rating will be dependent on the introduction of such features as autonomous emergency braking and lane departure warning. Along with these functions, as previously mentioned, a forward-facing camera system also supports other ADAS capabilities such as intelligent high-beam control and road sign detection.

Subaru

Subaru recently announced its latest stereo camera system, developed by Fuji Heavy Industries and called “EyeSight.” This system is installed on the inside of the front windshield, ahead of the rear view mirror. Since EyeSight's stereo camera system can accurately assess vehicle-to-object distance, it can meet a number of new safety requirements, such as NCAP. For example, EyeSight offers advanced “Pre-Collision Braking Control” and “All-Speed Range-Adaptive Cruise Control System” features that Subaru claims will significantly reduce collision risk at all speeds, particularly in slow or congested traffic.

When an EyeSight-equipped car gets close to another object (vehicle, cyclist, pedestrian, etc.), Pre-Collision Braking Control activates an alarm to warn the driver of the potential hazard. If the speed difference between the Subaru and the object ahead is below 30 km/h and the Subaru driver does not immediately brake in response to the alarm, EyeSight then automatically slows the car. If the speed difference between the driver’s vehicle and the object in front is more than 30 km/h, on the other hand, EyeSight will automatically apply the vehicle's brakes. And all-speed adaptive cruise control enables EyeSight-equipped cars to monitor and adjust their speed in order to maintain a safe distance between them and vehicles ahead.

Volvo

Volvo's CWAB (Collision Warning with Auto Brake) was introduced on the S80 model vehicle. CWAB is equipped with a radar-plus-camera "fusion" system and provides driver warning through a HUD that visually resembles a pair of brake lamps. If the driver does not immediately react in response to the alarm, the system pre-charges the brakes and increases brake-assist sensitivity in order to maximize braking performance. The latest CWAB versions will even automatically apply the brakes to minimize vehicle, pedestrian and other object impacts. Volvo has a long history of safety innovations; the company introduced the first cyclist detection system in 2013, and the V40 also included the world's first pedestrian airbag.

The BLIS (Blind Spot Information System), which was originally developed by Volvo, has subsequently been adapted by Ford for use in the Ford Fusion Hybrid, Mercury Milan, and Lincoln MKZ. This system, using two door-mounted cameras and a centralized ECU (electronic control unit), generates a visible alert of an impending collision when another car enters the vehicle's blind spot while the driver is switching lanes. Mazda has introduced a similar safety feature called BSM (Blind Spot Monitoring), which is now standard on the CX-9 Touring and Grand Touring models in 2013, as well as being a trim level option on the Mazda-6 I Touring Plus and some versions of the Mazda-3 and CX-5. Mazda has also proposed a cost- and power-optimized implementation with "smart" camera modules inserted into the side mirrors, which do image pre-processing and thereby send only metadata to the ECU, rather than raw video data.
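The appeal of sending metadata instead of raw video becomes clear with some simple arithmetic. The Python sketch below compares the two link bandwidths. Every number in it (a 1280x800 sensor, 12 bits per pixel, 30 frames per second, ten detected objects per frame at 16 bytes each) is an assumption chosen purely for illustration and does not describe Mazda's design.

```python
def raw_video_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Bits per second needed to stream uncompressed video to the ECU."""
    return width * height * bits_per_pixel * fps


def metadata_bps(objects_per_frame: int, bytes_per_object: int, fps: int) -> int:
    """Bits per second needed if the camera module sends only a short
    description (e.g. position, size, class) of each detected object."""
    return objects_per_frame * bytes_per_object * 8 * fps


# Assumed, illustrative figures: roughly a 1 Mpixel sensor at 30 frames/s.
raw = raw_video_bps(width=1280, height=800, bits_per_pixel=12, fps=30)
meta = metadata_bps(objects_per_frame=10, bytes_per_object=16, fps=30)

print(f"raw video: {raw / 1e6:.1f} Mbit/s")
print(f"metadata:  {meta / 1e3:.1f} kbit/s")
print(f"reduction: {raw // meta}x")
```

With these assumed figures the raw stream needs hundreds of megabits per second while the metadata stream needs only tens of kilobits per second, which is why pre-processing in the camera module can reduce both wiring cost and ECU load.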

The Embedded Vision Alliance

Embedded vision technology has the potential to fundamentally enable a wide range of ADAS and other electronic products that are more intelligent and responsive than before, and thus more valuable to users. It can also add helpful features to existing products, such as the vision-enhanced ADAS implementations discussed in this article. And it can provide significant new markets for hardware, software and semiconductor manufacturers. The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower engineers to transform this potential into reality. CogniVue, SoftKinetic and Synopsys, the co-authors of this article, are members of the Embedded Vision Alliance.

First and foremost, the Alliance's mission is to provide engineers with practical education, information, and insights to help them incorporate embedded vision capabilities into new and existing products. To execute this mission, the Alliance maintains a website providing tutorial articles, videos, code downloads and a discussion forum staffed by a diversity of technology experts. Registered website users can also receive the Alliance’s twice-monthly email newsletter, among other benefits.

In addition, the Embedded Vision Alliance offers a free online training facility for embedded vision product developers: the Embedded Vision Academy. This area of the Alliance website provides in-depth technical training and other resources to help engineers integrate visual intelligence into next-generation embedded and consumer devices. Course material in the Embedded Vision Academy spans a wide range of vision-related subjects, from basic vision algorithms to image pre-processing, image sensor interfaces, and software development techniques and tools such as OpenCV. Access is free to all through a simple registration process.

The Embedded Vision Summit

On Thursday, May 29, 2014, in Santa Clara, California, the Alliance will hold its fourth Embedded Vision Summit. Embedded Vision Summits are technical educational forums for engineers interested in incorporating visual intelligence into electronic systems and software. They provide how-to presentations, inspiring keynote talks, demonstrations, and opportunities to interact with technical experts from Alliance member companies. These events are intended to:

- Inspire engineers' imaginations about the potential applications for embedded vision technology through exciting presentations and demonstrations,

- Offer practical know-how for engineers to help them incorporate vision capabilities into their products, and

- Provide opportunities for engineers to meet and talk with leading embedded vision technology companies and learn about their offerings.

The Embedded Vision Summit West will be co-located with the Augmented World Expo, and attendees of the Embedded Vision Summit West will have the option of also attending the full Augmented World Expo conference or accessing the Augmented World Expo exhibit floor at a discounted price. Please visit the event page for more information on the Embedded Vision Summit West and associated hands-on workshops, such as detailed agendas, keynote, technical tutorial and other presentation details, speaker biographies, and online registration.

References:

- http://www.iihs.org/iihs/news/desktopnews/high-tech-system-on-volvos-is-preventing-crashes

- http://www5.mercedes-benz.com/en/innovation/magic-body-control-s-class-suspension/

Brian Dipert is Editor-In-Chief of the Embedded Vision Alliance. He is also a Senior Analyst at Berkeley Design Technology, Inc., which provides analysis, advice, and engineering for embedded processing technology and applications, and Editor-In-Chief of InsideDSP, the company's online newsletter dedicated to digital signal processing technology. Brian has a B.S. degree in Electrical Engineering from Purdue University in West Lafayette, IN. His professional career began at Magnavox Electronics Systems in Fort Wayne, IN; Brian subsequently spent eight years at Intel Corporation in Folsom, CA. He then spent 14 years at EDN Magazine.

Tim Droz heads the SoftKinetic U.S. organization, delivering 3D TOF (time-of-flight) and gesture solutions to international customers, such as Intel and Texas Instruments. Prior to SoftKinetic, he was Vice President of Platform Engineering and head of the Entertainment Solutions Business Unit at Canesta, subsequently acquired by Microsoft. His pioneering work extends into all aspects of the gesture and 3D ecosystem, including 3D sensors, gesture-based middleware and applications. Tim earned a BSEE from the University of Virginia, and an M.S. degree in Electrical and Computer Engineering from North Carolina State University.

Stéphane Francois is Program Manager at CogniVue, with more than 15 years of experience in vision technology as a user, support engineer, supplier and business manager. He has a Master’s Degree in Computer Science from Ecole Nationale d’Ingenieurs de Brest, France, and is a Certified Vision Professional – Advanced Level through the Automated Imaging Association, USA.

Markus Willems is currently responsible for Synopsys' processor development solutions. He has been with Synopsys for 14 years, supporting various system-level and functional verification marketing roles. He has worked in the electronic design automation and computer industries for more than 20 years in a variety of senior positions, including marketing, applications engineering, and research. Prior to Synopsys, Markus was product marketing manager at dSPACE, Paderborn, Germany. Markus received his Ph.D. (Dr.-Ing.) and M.Sc. (Dipl.-Ing.) in Electrical Engineering from Aachen University of Technology in 1998 and 1992, respectively. He also holds an MBA (Dipl.Wirt-Ing) from Hagen University.